A#

Abductive Reasoning#

Abductive reasoning is a type of reasoning where a conclusion is drawn based on the best explanation for a given set of observations. It involves considering different hypotheses and selecting the most likely or best explanation based on the available evidence. Abductive reasoning is used to make educated guesses or hypotheses when faced with incomplete or uncertain information. For example, observing a car that cannot start and a puddle of liquid under the engine, and concluding that the most likely explanation is a leak in the radiator.

Note

More at:

- LLM reasoning ability - https://www.kaggle.com/code/flaussy/large-language-models-reasoning-ability

Ablation#

Accuracy#

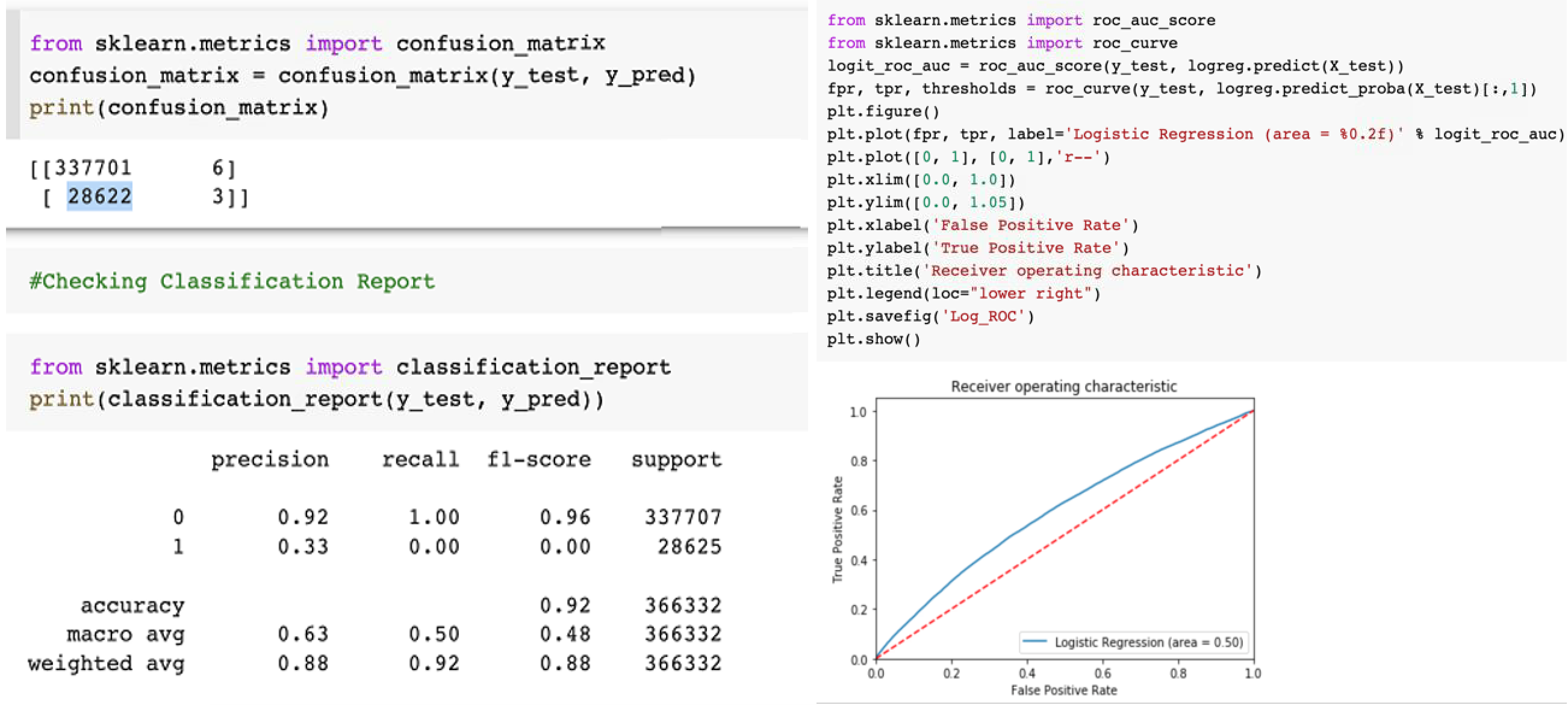

A metric used for model evaluation that measures the number of correct predictions made by the model over all kinds of predictions. Useful for classification tasks like sentiment analysis.

~ the percentage of samples correctly classified given a labelled (but possibly biased) dataset. Consider a classification task in which a machine learning system observes tumors and must predict whether they are malignant or benign. Accuracy, or the fraction of instances that were classified correctly, is an intuitive measure of the program's performance. While accuracy does measure the program's performance, it does not differentiate between malignant tumors that were classified as being benign, and benign tumors that were classified as being malignant. In some applications, the costs associated with all types of errors may be the same. In this problem, however, failing to identify malignant tumors is likely a more severe error than mistakenly classifying benign tumors as being malignant.

TP + TN

Accuracy = -------------------

TP + TN + FP + FN

T = Correctly identified

F = Incorrectly identified

P = Actual value is positive (class A, a cat)

F = Actual value is negative (class B, not a cat, a dog)

TP = True positive (correctly identified as class A)

TN = True negative (correctly identified as class B)

FP = False Positive

FN = False negative

TP + TN + FP + FN = all experiments/classifications/samples

More at:

See also A, Confusion Matrix

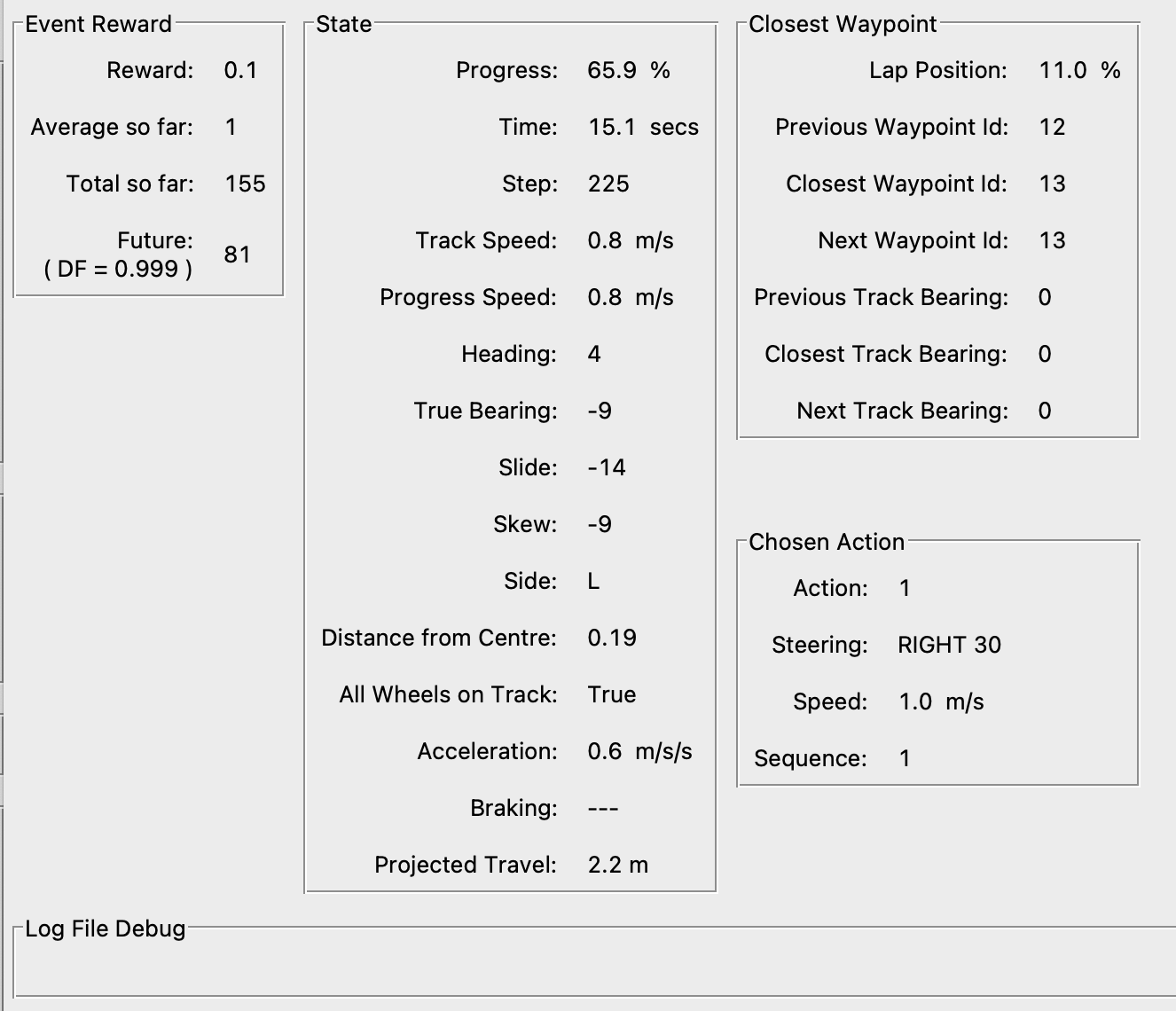

Action#

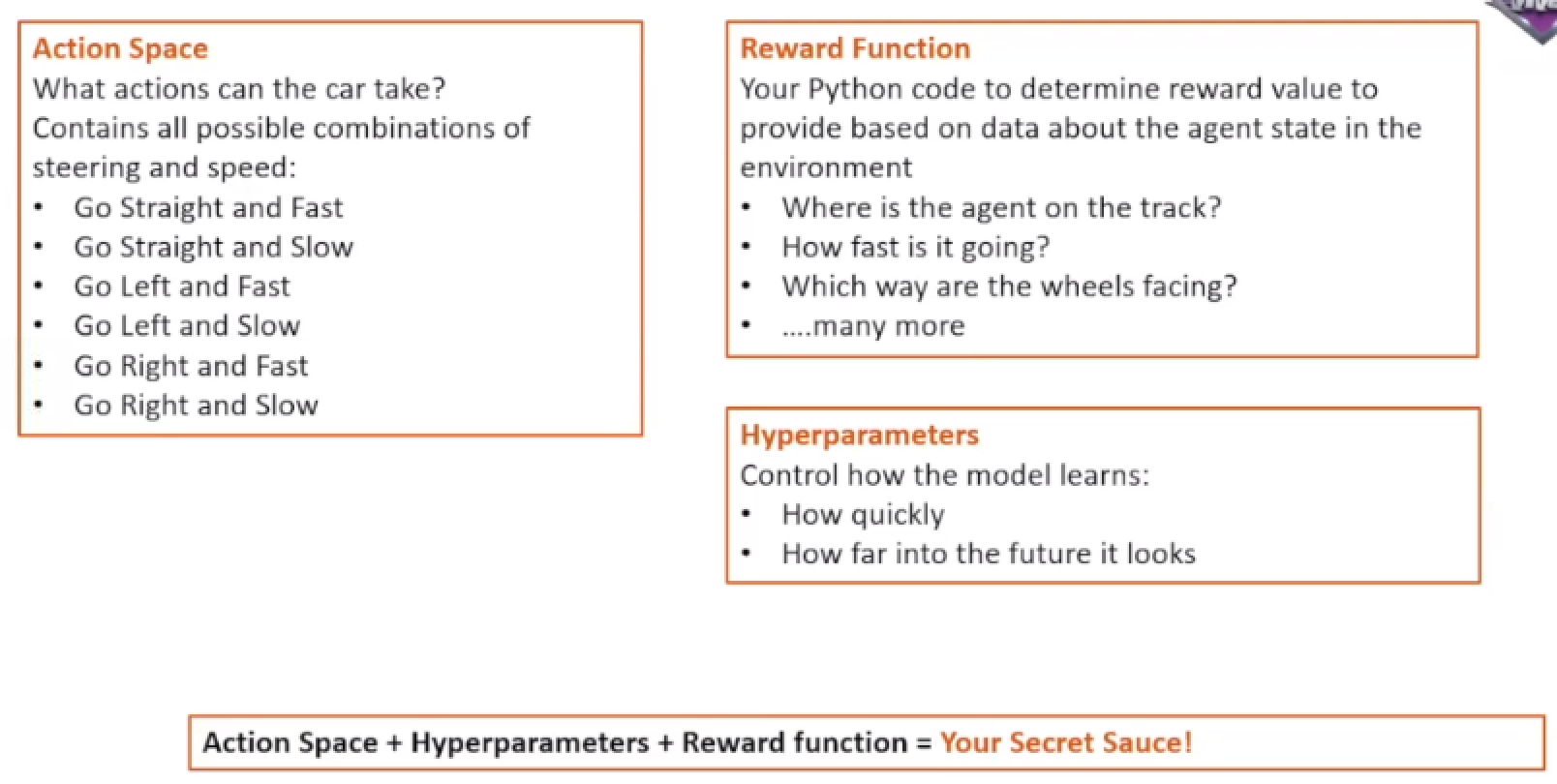

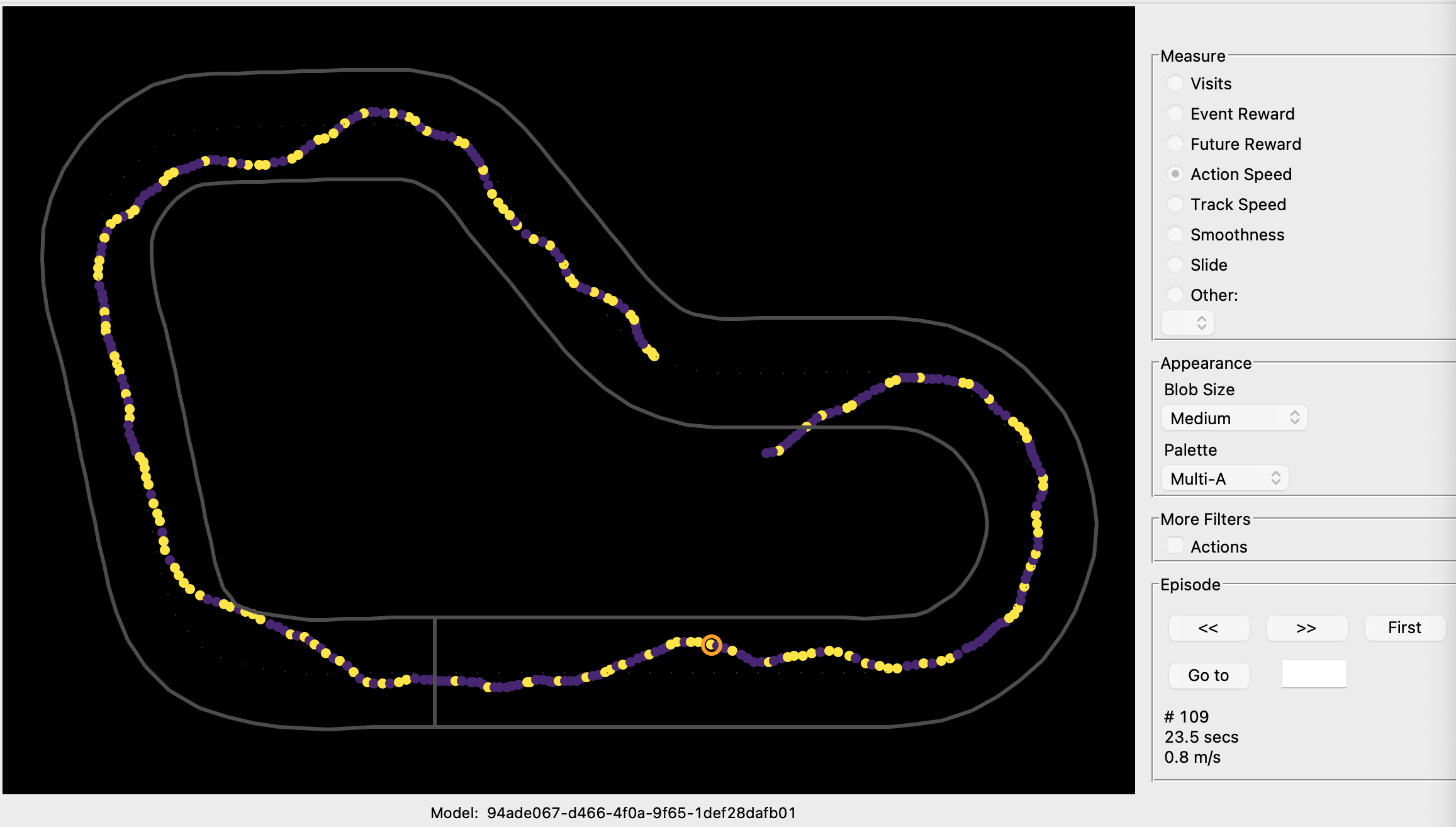

In Reinforcement Learning, an action is a move made by the agent in the current state. For AWS DeepRacer, an action corresponds to a move at a particular speed (throttle) and steering angle. With AWS DeepRacer, there is an immediate reward associated with any action.

See also A, Action Space

Action Space#

In Reinforcement Learning, represents a set of actions.

- Discrete action space - We can individually define each action. In the discrete action space setting, limiting an agent's choices to a finite number of predefined actions puts the onus on you to understand the impact of these actions and define them based on the environment (track, racing format) and your reward functions.

- Continuous action space -

This lists out all of what the agent can actually do at each timestep virtually or physically.

- Speed between 0.5 and 1 m/s

- Steering angle -30 to 30 deg

Action Transformer#

At Adept, we are building the next frontier of models that can take actions in the digital world—that’s why we’re excited to introduce our first large model, Action Transformer (ACT-1).

Why are we so excited about this?

First, we believe the clearest framing of general intelligence is a system that can do anything a human can do in front of a computer. A foundation model for actions, trained to use every software tool, API, and webapp that exists, is a practical path to this ambitious goal, and ACT-1 is our first step in this direction.

“Adept’s technology sounds plausible in theory, [but] talking about Transformers needing to be ‘able to act’ feels a bit like misdirection to me,” Mike Cook, an AI researcher at the Knives & Paintbrushes research collective, which is unaffiliated with Adept, told TechCrunch via email. “Transformers are designed to predict the next items in a sequence of things, that’s all. To a Transformer, it doesn’t make any difference whether that prediction is a letter in some text, a pixel in an image, or an API call in a bit of code. So this innovation doesn’t feel any more likely to lead to artificial general intelligence than anything else, but it might produce an AI that is better suited to assisting in simple tasks.”“Adept’s technology sounds plausible in theory, [but] talking about Transformers needing to be ‘able to act’ feels a bit like misdirection to me,” Mike Cook, an AI researcher at the Knives & Paintbrushes research collective, which is unaffiliated with Adept, told TechCrunch via email. “Transformers are designed to predict the next items in a sequence of things, that’s all. To a Transformer, it doesn’t make any difference whether that prediction is a letter in some text, a pixel in an image, or an API call in a bit of code. So this innovation doesn’t feel any more likely to lead to artificial general intelligence than anything else, but it might produce an AI that is better suited to assisting in simple tasks.”

# https://techcrunch.com/2022/04/26/2304039/

See also A, Reinforcement Learning, Transformer Architecture

Action-Value Function#

This tells us how good it is for the agent to take any given action from a given state while following the policy. In other words, it gives us the value of an action under policy (pi). The [state-value] function tells us how good any given state is for the RL agent, whereas the action-value function tells us how good it is for the RL agent to take any action from a given state.

Qpi(s,a) = E [ sum(0,oo, gamma*R | St=s, At=a]

# St state at a given timestep

# At action at a given timestep

See also A, Bellman Equation, Time Step

Activation Atlas#

Image used to walk through the embedding space of the model

More at:

- article - https://openai.com/index/introducing-activation-atlases/

- simulation + paper - https://distill.pub/2019/activation-atlas/

Activation Checkpointing#

~ one of the classic tradeoffs in computer science—between memory and compute.

~ active only during training?

Activation checkpointing is a technique used in deep learning models to reduce memory consumption during the backpropagation process, particularly in recurrent neural networks (RNNs) and transformers. It allows for training models with longer sequences or larger batch sizes without running into memory limitations.

During the forward pass of a deep learning model, activations (intermediate outputs) are computed and stored for each layer. These activations are required for computing gradients during the backward pass or backpropagation, which is necessary for updating the model parameters.

In activation checkpointing, instead of storing all activations for the entire sequence or batch, only a subset of activations is saved. The rest of the activations are recomputed during the backward pass as needed. By selectively recomputing activations, the memory requirements can be significantly reduced, as only a fraction of the activations need to be stored in memory at any given time.

The process of activation checkpointing involves dividing the computational graph into segments or checkpoints. During the forward pass, the model computes and stores the activations at the checkpoints. During the backward pass, the gradients are calculated using the stored activations, and the remaining activations are recomputed as necessary, using the saved memory from recomputed activations.

Activation checkpointing is an effective technique for mitigating memory limitations in deep learning models, particularly in scenarios where memory constraints are a bottleneck for training large-scale models with long sequences or large batch sizes. It helps strike a balance between memory consumption and computational efficiency, enabling the training of more memory-intensive models.

More at:

See also A, [Zero Redundancy Optimization]

Activation Function#

Activation functions are required to include non-linearity in the artificial neural network .

Without activation functions, in a multi-layered neural network the Decision Boundary stays a line regardless of the weight and bias settings of each artificial neuron!

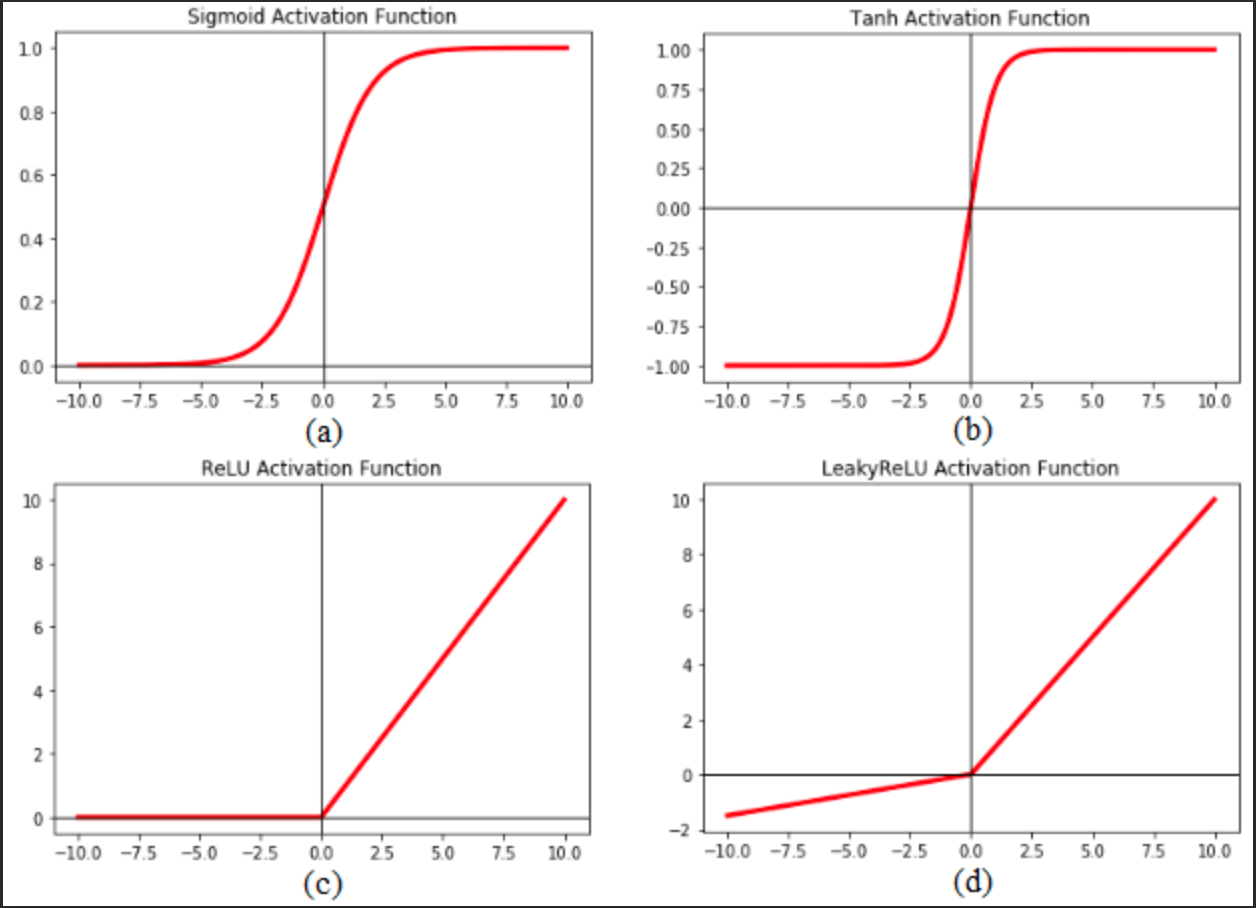

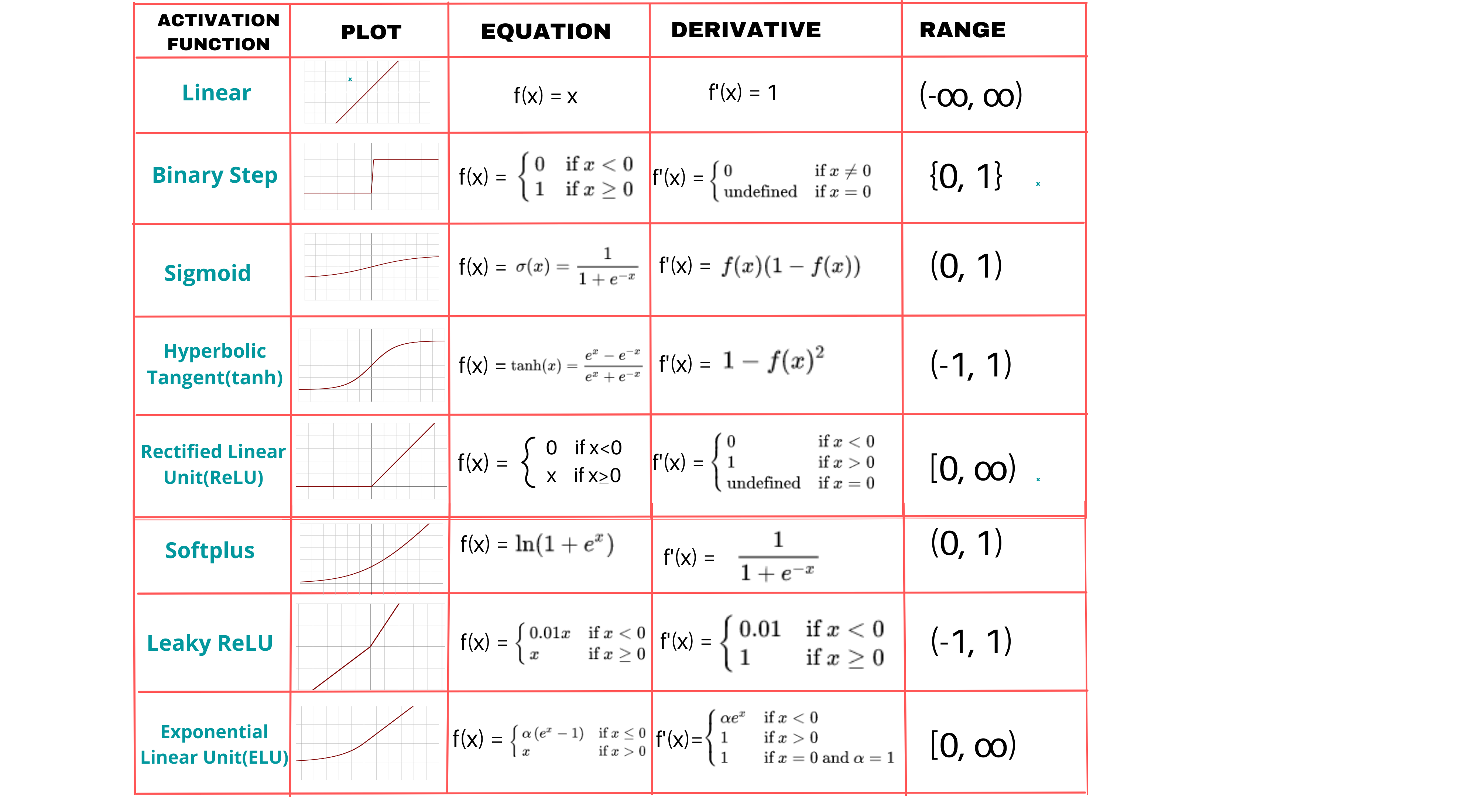

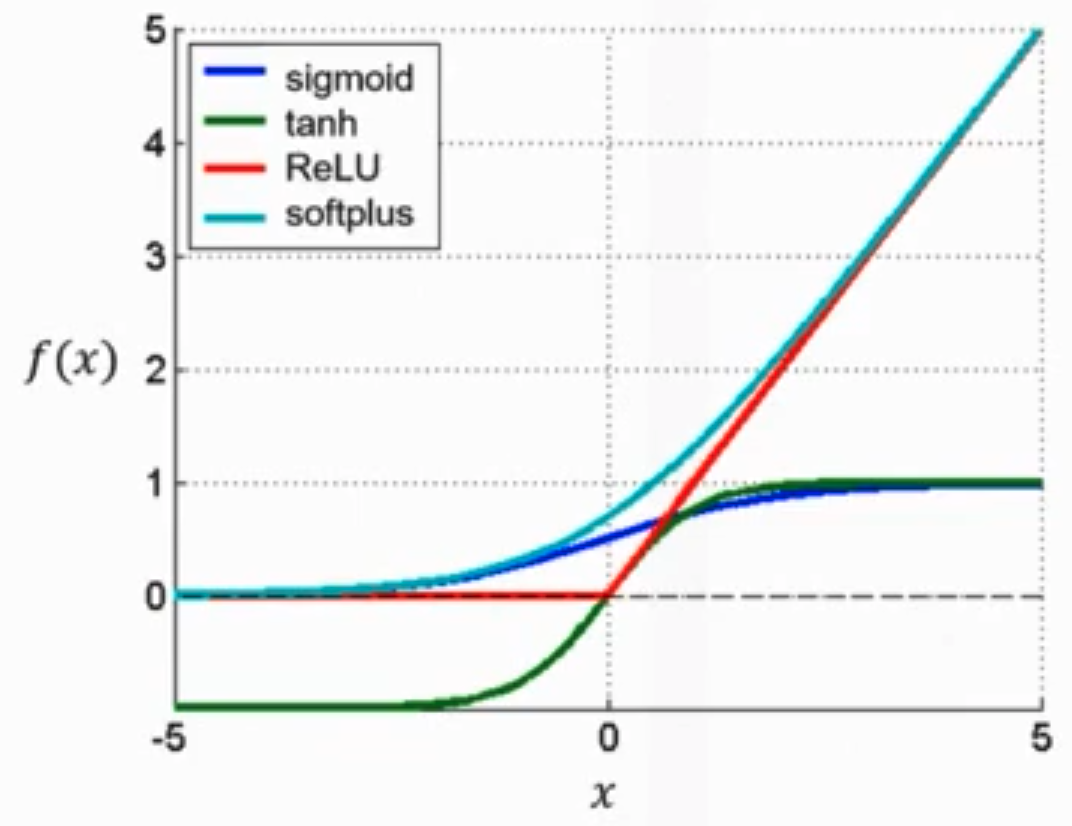

There are several activation functions used in the fields. They are:

- Rectified Linear Unit (ReLU) function

- LeakyReLU function

- Tanh function,

- Sigmoid function : Sigmoid is great to keep a probability between 0 and 1, even if sample is an outlier based on the sample. (Ex: how long to slow down for a car, or will I hit the tree given the distance, but here car goes at 500km/h = an outlier)

- Softplus function

- Step activation

- Gaussian Error Linear Unit (GELU) function

- Exponential Linear Unit (ELU) function

- Linear function

A crucial element within artificial neural networks, responsible for modifying input signals. This function adjusts the output magnitude based on the input magnitude: Inputs over a predetermined threshold result in larger outputs. It acts like a gate that selectively permits values over a certain point.

Warning ...

for multi-layer neural networks that use of an activation function at each layer, the backpropagation computation leads to loss of information (forward for input and backward for weight computation) which is known as the vanishing gradient problem.

See also A, Batch Normalization, Exploding Gradient Problem, [Gradient Descent Algorithm], Loss Function

Activation Layer#

Activation layers are an important component in convolutional neural networks (CNNs). Here's an explanation of what they are and why they are used:

The purpose of activation layers in a CNN is to introduce nonlinearity. Most of the layers in a CNN perform linear operations - for example, convolution layers apply a dot product between the input and a filter, and pooling layers downsample using an algorithm like max or average.

These linear operations alone don't allow the CNN to learn complex patterns. Without nonlinearity, no matter how many layers you stack, the output would still be a linear function of the input.

Activation layers apply a nonlinear function to the output from the previous layer. This allows the CNN to learn and model complex nonlinear relationships between the inputs and outputs.

Some common activation functions used are:

- ReLU (Rectified Linear Unit): ReLU applies the function f(x) = max(0, x). It thresholds all negative values to 0.

- Leaky ReLU: A variant of ReLU that gives a small negative slope (e.g. 0.01) to negative values rather than thresholding at 0.

- Tanh: Applies the hyperbolic tangent function to squash values between -1 and 1.

- Sigmoid: Applies the logistic sigmoid function to squash values between 0 and 1.

In practice, ReLU is by far the most common activation function used in CNNs. The nonlinearity it provides enables CNNs to learn very complex features and patterns in image and video data.

The key takeaway is that activation layers introduce nonlinearity which allows CNNs to learn and model complex relationships between inputs and outputs. Choosing the right activation function is important for enabling efficient training and generalization.

See also A, ...

Activation Map#

The activation map is the result of the application of the activation function on a feature map in a CNN.

See also A, ...

Activation Step#

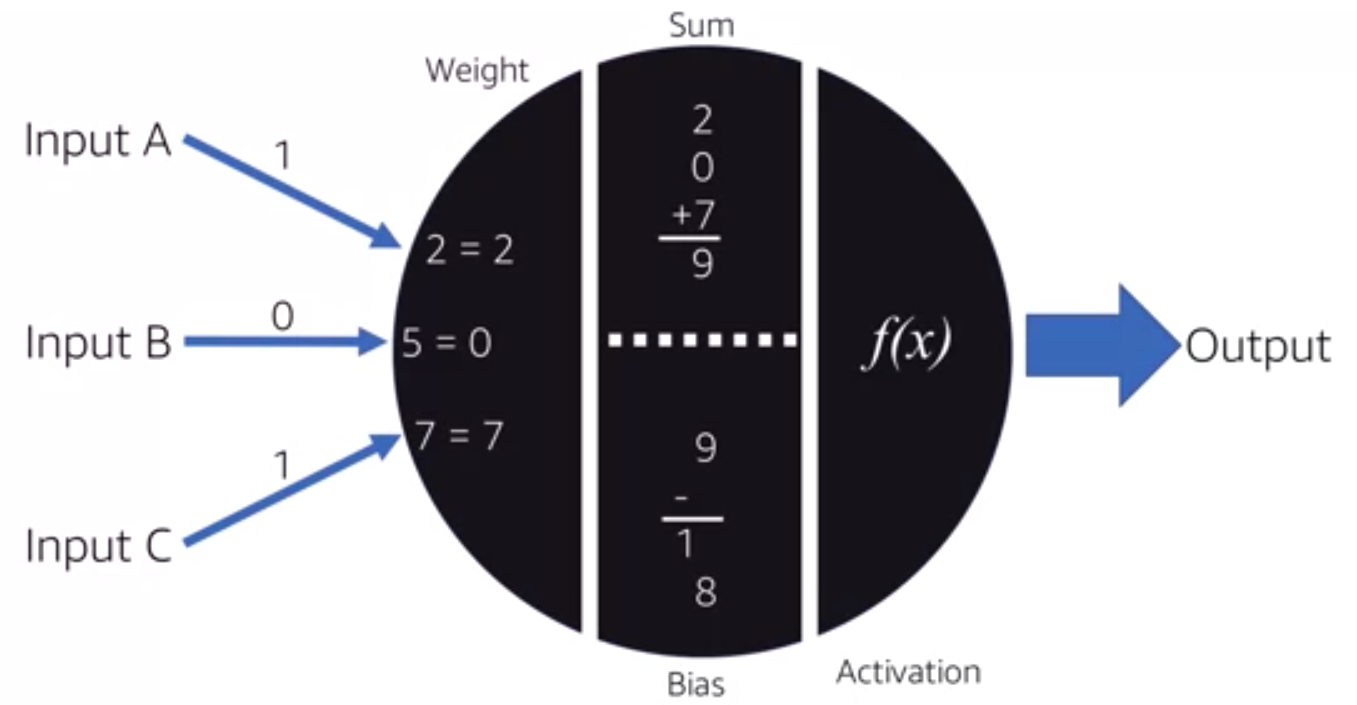

Last step in an artificial neuron before an output is generated.

See also A, ...

Active Learning#

~ Pick the sample from which you will learn the most and have them labelled. How to select those samples? But a model with a seed sample set, run data to the model, label the ones that have the most uncertainty.

A form of reinforcement learning from human feedback (RLHF) where an algorithm actively engages with a user to obtain labels for data. It refines its performance by getting labels for desired outputs.

More at:

See also A, Bayesian Optimization Sampling Method, Passive Learning

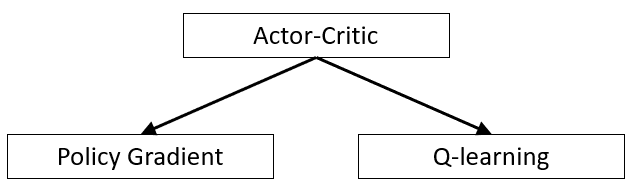

Actor#

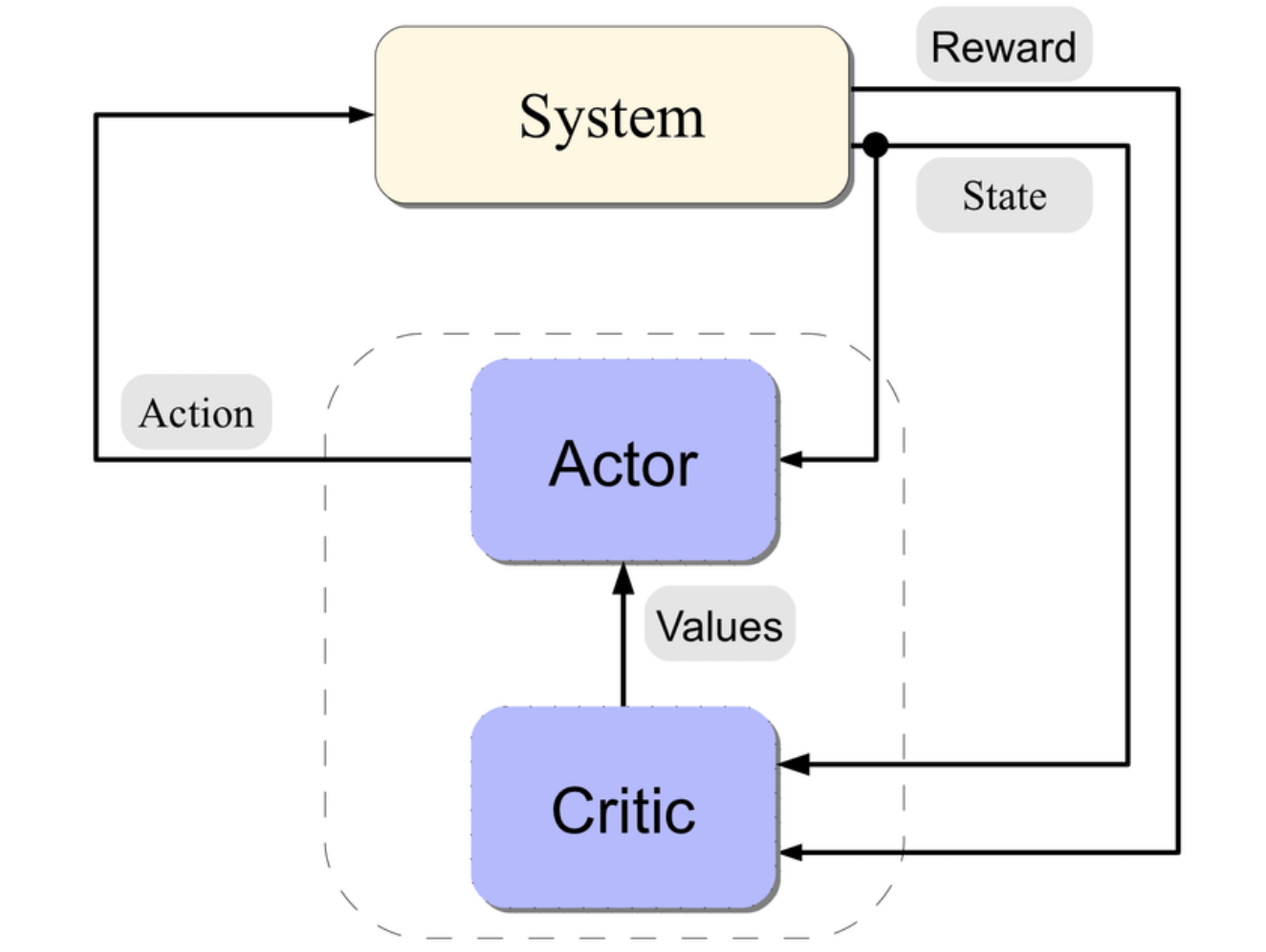

In reinforcement learning, when using an actor-critic algorithm, an actor is a Policy Gradient algorithm that decides on an action to take.

Actor Network#

See also A, ...

Actor-Critic Algorithm#

When you put actor and critic together!

A two-part algorithmic structure employed in reinforcement learning (RL). Within this model, the “Actor” determines optimal actions based on the state of its environment. At the same time, the “Critic” evaluates the quality of state-action pairs, improving them over time.

Variations:

See also A, [Model-Free Learning Algorithm]

Actor-Critic Architecture#

To use an analogy, an actor=child playing in a environment=playground and watched by a critic=parent!

The critic outputs feedback = an action score!

The actor-critic architecture combines the critic network with an actor network, which is responsible for selecting actions based on the current state. The critic network provides feedback to the actor network by estimating the quality of the selected actions or the value of the current state. This feedback is then used to update the actor network's policy parameters, guiding it towards actions that are expected to maximize the cumulative reward.

This architecture works, because of the derivative chain rule

See also A, ...

Actor-Critic With Experience Replay (ACER) Algorithm#

A sample-efficient policy gradient algorithm. ACER makes use of a replay buffer, enabling it to perform more than one gradient update using each piece of sampled experience, as well as a Q-Function approximate trained with the Retrace algorithm.

See also A, PPO Algorithm, Reinforcement Learning, SAC Algorithm

Adapter Layer#

Add new intermediate module

More at:

- site - https://research.google/pubs/parameter-efficient-transfer-learning-for-nlp/

- code - https://github.com/google-research/adapter-bert

- paper - https://arxiv.org/abs/1902.00751

See also A, ...

Adaptive Boosting (AdaBoost)#

- AdaBoost combines a lot of "weak learners" to make classifications. The weak learners are almost always decision stumps.

- Some stumps get more say (weight) in the classification than others

- Each stump is made by taking the previous stump's mistakes into account

More at:

- example - https://www.analyticsvidhya.com/blog/2021/09/adaboost-algorithm-a-complete-guide-for-beginners/

- wikipedia - https://en.wikipedia.org/wiki/AdaBoost

- https://towardsdatascience.com/understanding-adaboost-2f94f22d5bfe

See also A, Boosting, Decision Stump, Forest Of Stumps

Adaptive Delta (AdaDelta) Algorithm#

AdaDelta is an optimization algorithm for [gradient descent], which is commonly used in [machine learning] and [deep learning]. It was introduced by Matthew Zeiler in 2012 as an extension of the AdaGrad algorithm.

The key idea behind AdaDelta is to adaptively adjust the learning rate for each parameter based on the historical gradients of that parameter. Unlike AdaGrad, which accumulates the square of the gradients over all time, AdaDelta restricts the accumulation to a fixed window of the most recent gradients.

AdaDelta stands for "Adaptive Delta". The "Delta" part of the name refers to the parameter updates, which are represented by the variable delta in the update rule. The "Adaptive" part of the name refers to the fact that the learning rate is adaptively adjusted for each parameter based on the historical gradients of that parameter.

More at:

- https://paperswithcode.com/method/adadelta

- paper - https://arxiv.org/abs/1212.5701v1

- code - https://github.com/pytorch/pytorch/blob/b7bda236d18815052378c88081f64935427d7716/torch/optim/adadelta.py#L6

- from scratch - https://machinelearningmastery.com/gradient-descent-with-adadelta-from-scratch/

See also A, Adaptive Gradient Algorithm

Adaptive Learning#

Adaptive learning is a way of delivering learning experiences that are customized to the unique needs and performance of each individual. It can use computer software, online systems, algorithms, and artificial intelligence to provide feedback, pathways, resources, and materials that are most effective for each learner. Adaptive learning can vary depending on the content, the learner, and the network of other learners.

See also A, Learning Method

Adaptive Learning Algorithm#

In [Gradient Descent] and [Gradient Descent with Momentum], we saw how learning rate affects the convergence. Setting the learning rate too high can cause oscillations around minima and setting it too low, slows the convergence. Learning Rate in Gradient Descent and its variations like Momentum is a hyper-parameter which needs to be tuned manually for all the features.

With those algorithms, when we try updating weights in a neural net * Learning rate is the same for all the features * Learning rate is the same at all the places in the cost space

With adaptive learning algorithm, the learning rate is not constant and changes based on the feature and the location

Algorithm with adaptive learning rates are:

- Adam

- AdaGrad

- Root Mean Square Propagation (RMSprop)

- AdaDelta

- and more ...

See also A, ...

Adaptive Gradient (AdaGrad) Algorithm#

~ optimization algorithm that use different learning rate for each parameter/weight/feature

- great when input variables are dense features and sparse features (lots of 0)

Unfortunately, this hyper-parameter could be very difficult to set because if we set it too small, then the parameter update will be very slow and it will take very long time to achieve an acceptable loss. Otherwise, if we set it too large, then the parameter will move all over the function and may never achieve acceptable loss at all. To make things worse, the high-dimensional non-convex nature of neural networks optimization could lead to different sensitivity on each dimension. The learning rate could be too small in some dimension and could be too large in another dimension.

One obvious way to mitigate that problem is to choose different learning rate for each dimension, but imagine if we have thousands or millions of dimensions, which is normal for deep neural networks, that would not be practical. So, in practice, one of the earlier algorithms that have been used to mitigate this problem for deep neural networks is the AdaGrad algorithm (Duchi et al., 2011). This algorithm adaptively scaled the learning rate for each dimension.

Adagrads most significant benefit is that it eliminates the need to tune the learning rate manually, but it still isn't perfect. Its main weakness is that it accumulates the squared gradients in the denominator. Since all the squared terms are positive, the accumulated sum keeps on growing during training. Therefore the learning rate keeps shrinking as the training continues, and it eventually becomes infinitely small. Other algorithms like Adadelta, RMSprop, and Adam try to resolve this flaw.

More at:

- https://medium.com/konvergen/an-introduction-to-adagrad-f130ae871827

- https://ml-explained.com/blog/adagrad-explained

See also A, ...

Adaptive Learning Rate#

See also A, ...

Adaptive Moment (Adam) Estimation Algorithm#

Adam (Adaptive Moment Estimation) is an optimization algorithm used in machine learning to update the weights of a neural network during training. It is an extension of stochastic gradient descent (SGD) that incorporates ideas from both momentum-based methods and adaptive learning rate methods.

The main idea behind Adam is to adjust the learning rate for each weight based on the gradient's estimated first and second moments. The first moment is the mean of the gradient, and the second moment is the variance of the gradient. Adam maintains an exponentially decaying average of the past gradients, similar to the momentum method, and also an exponentially decaying average of the past squared gradients, similar to the adaptive learning rate methods. These two estimates are used to update the weights of the network during training.

Compared to Stochastic Gradient Descent (SGD), Adam can converge faster and requires less hyperparameter tuning. It adapts the learning rate on a per-parameter basis, which helps it to converge faster and avoid getting stuck in local minima. It also uses momentum to accelerate the convergence process, which helps the algorithm to smooth out the gradient updates, resulting in a more stable convergence process. Furthermore, Adam uses an adaptive learning rate, which can lead to better convergence on complex, high-dimensional problems.

More at:

- https://medium.com/geekculture/a-2021-guide-to-improving-cnns-optimizers-adam-vs-sgd-495848ac6008

- https://medium.com/@Biboswan98/optim-adam-vs-optim-sgd-lets-dive-in-8dbf1890fbdc

See also A, ...

Addiction#

More at:

See also A, Delayed Reward, Reinforcement Learning, Reward Shaping

Adept AI Company#

Unicorn startup company Adept is working on a digital assistant that can do all your clicking, searching, typing and scrolling for you. Its AI model aims to convert a simple text command (like “find a home in my budget” or “create a profit and loss statement”) into actual actions carried out by your computer without you having to lift a finger. Adept has announced $415 million in total funding and is backed by strategic investors like Microsoft and Nvidia. CEO David Luan cofounded the startup with Ashish Vaswani and Niki Parmar, former Google Brain scientists, who invented a major AI breakthrough called the transformer (that’s the T in ChatGPT). The latter two departed the company in 2022, but six other founding team members — Augustus Odena, Max Nye, Fred Bertsch, Erich Elsen, Anmol Gulati, and Kelsey Szot — remain.

The company is claiming to be building an action transformer model

More at:

- site - https://www.adept.ai/

- crunchbase - https://www.crunchbase.com/organization/adept-48e7

- articles

See also A, ...

Adjusted R-Square#

The adjusted R-squared (R̅^2) is a modified version of the [R-squared] that has been adjusted for the number of [predictors] in the model. The adjusted R-squared increases only if the new term improves the model more than would be expected by chance.

It is calculated as:

R̅^2 = 1 - (1 - R^2)*(n-1)/(n-k-1)

Where:

n is the sample size

k is the number of predictors (not counting the intercept)

The adjusted R-squared:

- Allows for the degrees of freedom associated with the sums of the squares.

- Decreases when an insignificant predictor variable is added to the model.

- Helps choose the better model in a regression with many [predictor] variables.

Unlike R^2, the adjusted R̅^2 will not artificially inflate as more variables are included. It only increases if the new variable improves the model's ability to explain the response variable.

The adjusted R-squared can be negative, and will be lower than the regular R-squared. Negative values indicate that the regression model is a poor fit, worse than just using the mean of the dependent variable.

Overall, the adjusted R-squared attempts to make a fair comparison between models with different numbers of predictor variables. It is generally preferred over R^2 when evaluating regression models.

See also A, ...

Adobe Company#

- Firefly - text to image generator

More at:

- principles - https://www.adobe.com/about-adobe/aiethics.html

See also A, ...

Adobe Firefly Product#

Experiment, imagine, and make an infinite range of creations with Firefly, a family of creative generative AI models coming to Adobe products.

More at:

See also A, ...

Advantage Actor-Critic (A2C) Algorithm#

A2C, or Advantage Actor-Critic, is a synchronous version of the A3C policy gradient method. As an alternative to the asynchronous implementation of A3C, A2C is a synchronous, deterministic implementation that waits for each actor to finish its segment of experience before updating, averaging over all of the actors. This more effectively uses GPUs due to larger [batch sizes].

An advanced fusion of [policy gradient] and learned value function within reinforcement learning (RL). This hybrid algorithm is characterized by two interdependent components the “Actor,” which learns a parameterized policy, and the “Critic,” which assimilates a value function for the evaluation of state-action pairs. These components collectively contribute to a refined learning process.

More at:

- paper - https://arxiv.org/abs/1602.01783v2

- code - https://paperswithcode.com/paper/asynchronous-methods-for-deep-reinforcement#code

- articles

See also A, ...

Advanced Micro Devices (AMD) Company#

A company that build, design, and sells GPUs

See also A, ...

Advantage Estimation#

See also A, ...

Adversarial Attack#

In the last few years researchers have found many ways to break AIs trained using labeled data, known as supervised learning. Tiny tweaks to an AI’s input (a.k.a. Adversarial Example) — such as changing a few pixels in an image—can completely flummox it, making it identify a picture of a sloth as a race car, for example. These so-called adversarial attacks have no sure fix.

In 2017, Sandy Huang, who is now at DeepMind, and her colleagues looked at an AI trained via reinforcement learning to play the classic video game Pong. They showed that adding a single rogue pixel to frames of video input would reliably make it lose. Now Adam Gleave at the University of California, Berkeley, has taken adversarial attacks to another level.

An attempt to damage a [machine learning] model by giving it misleading or deceptive data during its training phase, or later exposing it to maliciously-engineered data, with the intent to induce degrade or manipulate the model’s output.

See also A, Adversarial Policy, [Threat Model]

Adversarial Example#

The building blocks of an adversarial attack: Inputs deliberately constructed to provoke errors in machine learning models. These are typically deviations from valid inputs included in the data set that involve subtle alterations that an attacker introduces to exploit vulnerabilities in the model.

See also A, ...

Adversarial Imitation Learning#

See also A, [Imitation Learning]

Adversarial Model#

See also A, State Model

Adversarial Policy#

By fooling an AI into seeing something that isn’t really there, you can change how things around it act. In other words, an AI trained using reinforcement learning can be tricked by weird behavior. Gleave and his colleagues call this an adversarial policy. It’s a previously unrecognized threat model, says Gleave.

See also A, Adversarial Attack, [Threat Model]

Affective Computing#

Affective computing is the study and development of systems and devices that can recognize, interpret, process, and simulate human affects. It is an interdisciplinary field spanning computer science, psychology, and cognitive science. While some core ideas in the field may be traced as far back as to early philosophical inquiries into emotion, the more modern branch of computer science originated with Rosalind Picard's 1995 paper on affective computing and her book Affective Computing published by MIT Press. One of the motivations for the research is the ability to give machines Emotional Intelligence, including to simulate empathy. The machine should interpret the emotional state of humans and adapt its behavior to them, giving an appropriate response to those emotions.

More at:

See also A, ...

Agency#

~ having control over your action (free will)

Agency refers to the capacity for human beings to make choices and take actions that affect the world. It is closely related to the concept of free will - the ability to make decisions and choices independently. Some key aspects of agency include:

- Autonomy - Being able to act according to one's own motivations and values, independently of outside influence or control. This involves having the freedom and capacity to make one's own choices.

- Self-efficacy - Having the belief in one's own ability to accomplish goals and bring about desired outcomes through one's actions. This contributes to a sense of control over one's life.

- Intentionality - Acting with intention and purpose rather than just reacting instinctively. Agency involves making deliberate choices to act in certain ways.

- Self-determination - Being able to shape one's own life circumstances rather than being completely shaped by external factors. Exercising agency is about asserting control within the possibilities available to each individual.

- Moral accountability - Being responsible for and willing to accept the consequences of one's actions. Agency implies being answerable for the ethical and social impacts of one's choices.

So in summary, agency is the ability people have to make free choices and take purposeful action that directs the course of their lives and the world around them. It is central to human experience and identity.

More at:

- wikipedia - https://en.wikipedia.org/wiki/Agency_(sociology)

Agent#

~ As agency and autonomy

A person, an animal, or a program that is free to make a decision or take an action. An agent has a purpose and a goal.

Type of agents:

- Humans

- Animals

- AI Agents

- ...

- Embodied Agents

See also A, ...

Agent-Based Modeling#

A method employed for simulating intricate systems, focusing on interactions between individual [agents] to glean insights into emergent system behaviors.

See also A, ...

Agent Registry#

- Web agent

- Contextual Search Agent

- API Agent

- Text/Image Analysis Agent

- Data Science Agent

- Compliance Agent

- etc.

See also A, AI Agent, [Agent SDK]

Agent Software Development Kit (Agent SDK)#

See also A, Agent Registry

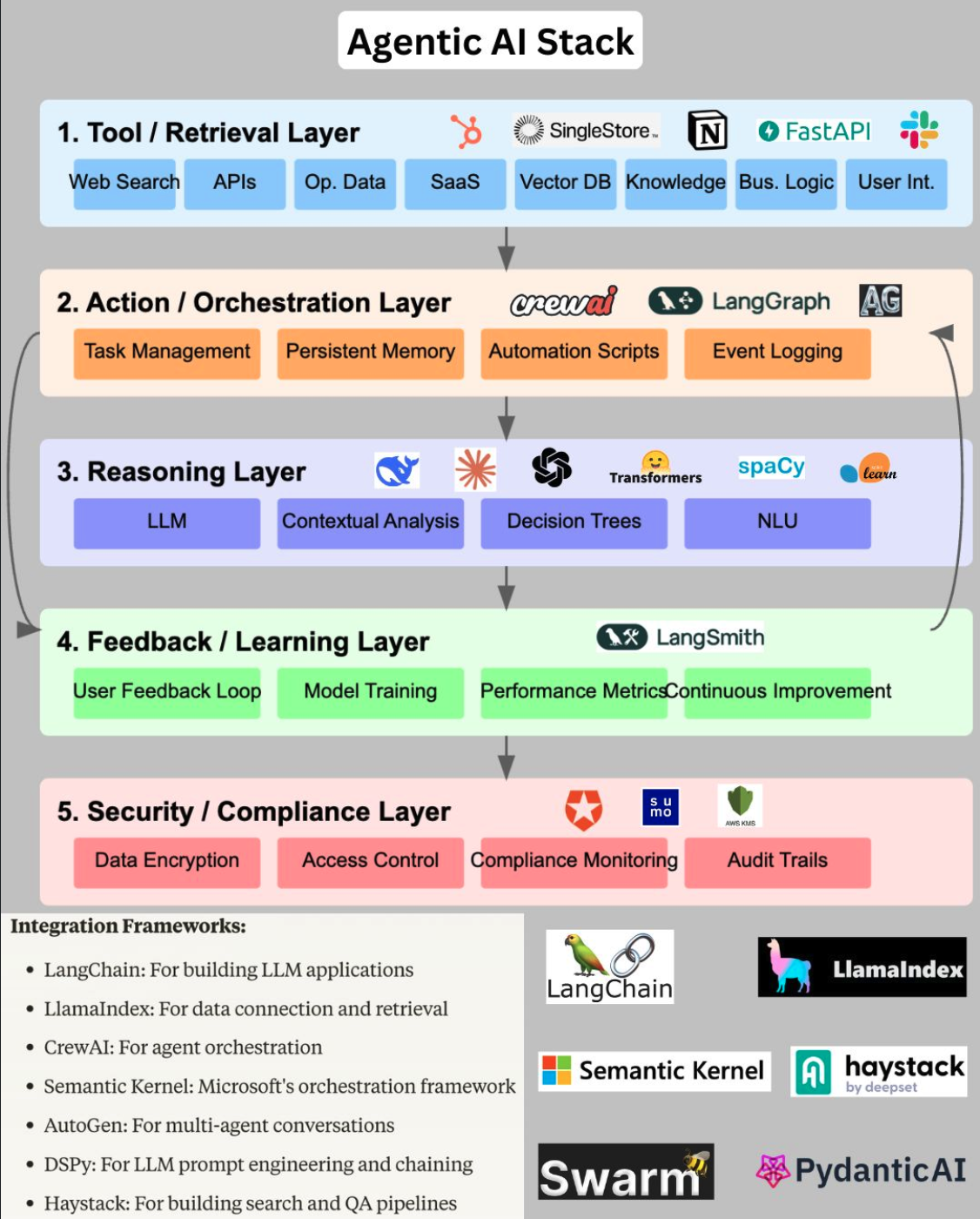

Agentic AI Stack#

An Agentic AI Stack is essential for creating intelligent, autonomous systems capable of handling complex tasks and making informed decisions.

The Agentic AI Stack shown below is a comprehensive framework designed to enable autonomous decision-making and task execution within AI-driven systems. It consists of multiple layers, each serving a distinct function to support the system's operations.

At the foundation is the Tool/Retrieval Layer, which handles information gathering through web searches, APIs, operational databases, and SaaS platforms. It also includes vector databases, knowledge bases, business logic, and user interaction interfaces, ensuring the system can access and utilize diverse data sources effectively.

The Action/Orchestration Layer manages task execution with components like task management systems, persistent memory, automation scripts, and event logging, enabling the system to perform actions autonomously and maintain operational records.

Central to the stack is the Reasoning Layer, powered by Large Language Models (LLMs) and supported by contextual analysis tools, decision trees, and natural language understanding (NLU) components. This layer provides the cognitive capabilities necessary for understanding and generating human-like text.

The Feedback/Learning Layer focuses on continuous improvement through user feedback collection, model training, and performance monitoring, ensuring the system adapts and improves over time.

Finally, the Security/Compliance Layer ensures secure and compliant operations with data encryption, access control, compliance monitoring, and audit trails.

Together, these layers form a robust Agentic AI Stack, facilitating autonomous, adaptive, and secure AI system operations.

[Note: These are not the standard set of tools to be used. The tools and frameworks can change according to the use case]

See also A, ...

Agentic RAG#

~ a type of RAG

More at: * https://github.com/asinghcsu/AgenticRAG-Survey

See also A, ...

Agentic Workflow#

~ Smart workflow with AI

Function calls that work

See also A, ...

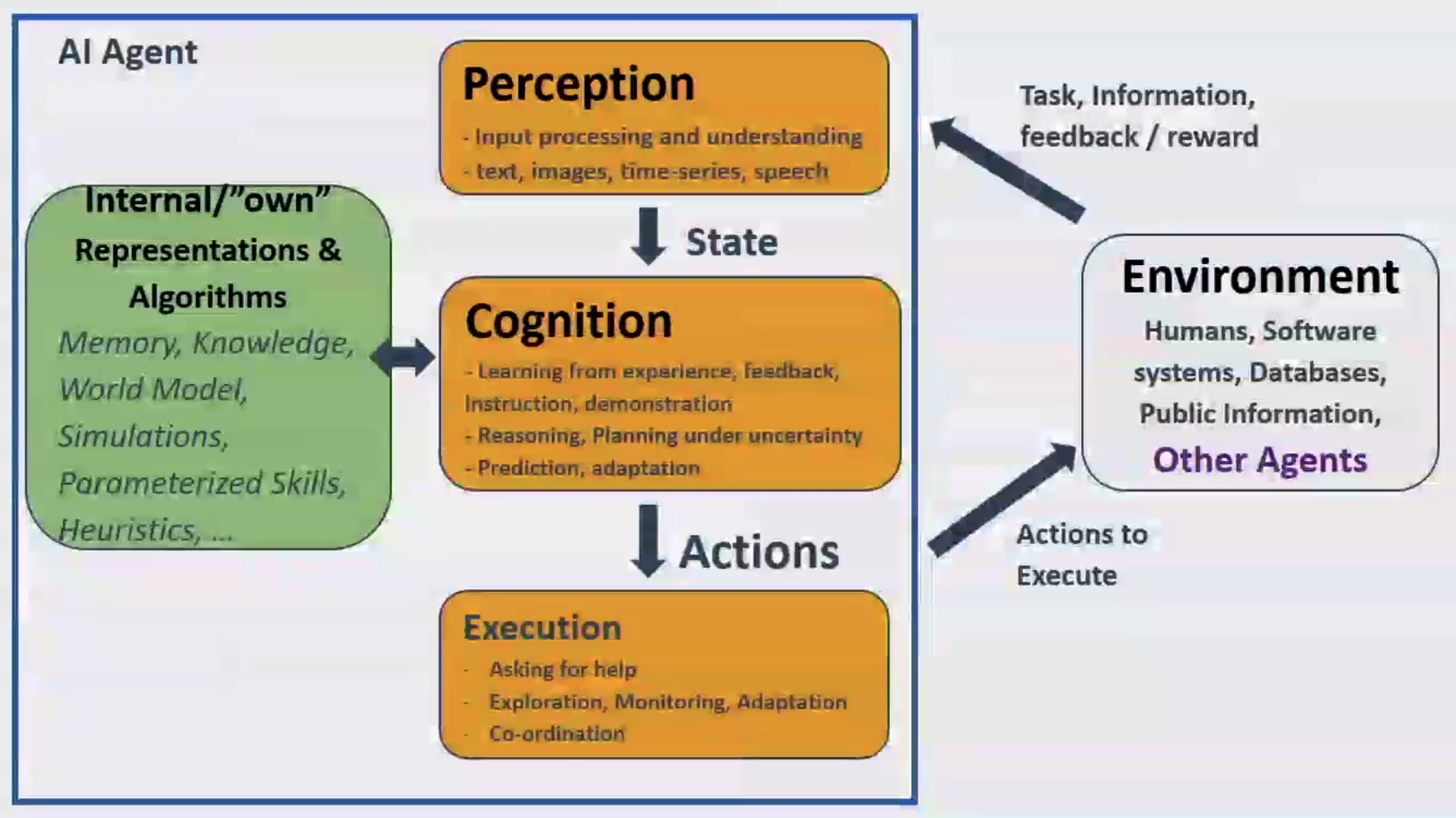

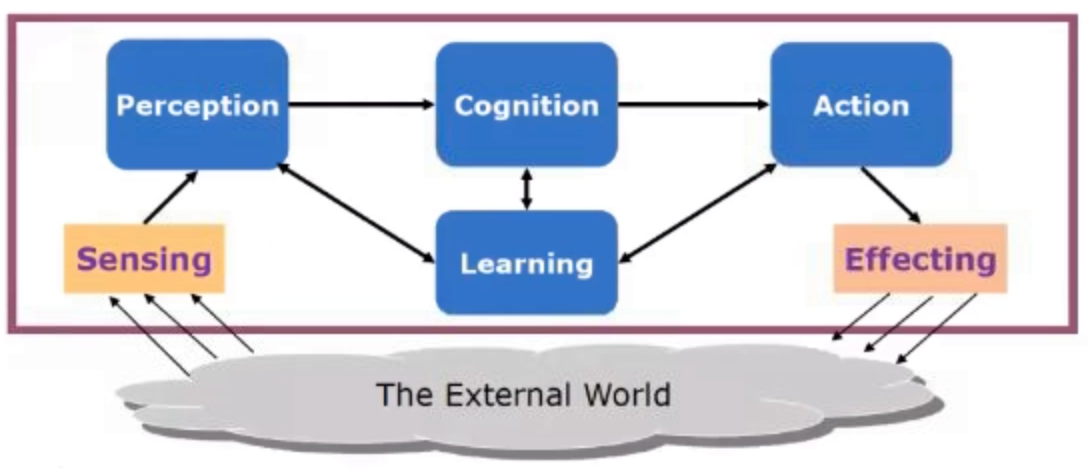

AI Agent#

~ autonomous entities that can perceive their environment, make decisions, and take actions to achieve their goals. This includes software agents, such as chatbots or recommendation systems, as well as physical agents, such as robots or self-driving cars.

Agents in AI work by perceiving their environment through sensors, making decisions based on their perceptions and internal state, and taking actions through actuators. The decisions are typically made using AI algorithms, which can range from simple rule-based systems to complex machine learning models.

Agents can operate independently, or they can interact with other agents in multi-agent systems.

Agents are used in many areas of AI. Software agents are used in areas like customer service, where chatbots can handle customer queries, or in e-commerce, where recommendation systems can suggest products to customers. Physical agents are used in areas like robotics, where robots can perform tasks in the physical world, or in transportation, where self-driving cars can navigate the roads.

There are different types of AI agents, function of how their goal is coded. That includes:

- LLM agents such as SDLC Agents who simply interact with one another during a predefined workflow

- Reinforcement Learning (RL) agents whose goal is to maximize a total reward

- Modular Reasoning Knowledge and Language (MRKL) Agents whom can reason through a LLM and use external tools

- ...

- Autonomous Agents forming a society of mind

- Intelligent Agents - incorporate learning

- Rational Agents - make decision to achieve the best outcome based on the info available to them

See also A, Agent Registry, [Agent SDK], Embodied Agent

AI Alignment#

~ Alignment is training a model to produce outputs more in line with human preference and expectation.

In the field of artificial intelligence (AI), AI alignment research aims to steer AI systems towards their designers’ intended goals and interests. An aligned AI system advances the intended objective; a misaligned AI system is competent at advancing some objective, but not the intended one.

Alignment types:

- Instructional alignment - answering questions learned from data during the pre-training phase

- Behavior alignment - helpfulness vs harmlessness

- Style alignment - more neutral / grammatically correct

- Value alignment - aligned to a set of values

/// details | Is pretraining the first step to alignment? type:question ///

/// details | Is fine-tuning a method for alignment? type:question ///

/// details | What about guardrails? type:question ///

Examples:

Reinforcement Learning (RL) is a machine learning technique that uses sequential feedback to teach an RL agent how to behave in an environment. RL is the most talked about method of alignment but not the only option! OpenAI popularized the method in 2022 specifically using RL from human feedback (RLHF)

Another method is Supervised Fine-Tuning (SFT) - letting an LLM read correct examples of alignment (standard deep learning/language modeling for the most part) = must cheaper than the previous method and much faster!

To be competitive, you need to use both of the methods!

# ChatGPT rules (that can easily be bypassed or put in conflict with clever prompt engineering!)

1. Provide helpful, clear, authoritative-sounding answers that satisfy human readers.

2. Tell the truth.

3. Don’t say offensive things.

More at :

- https://scottaaronson.blog/?p=6823

- wikipedia - https://en.wikipedia.org/wiki/AI_alignment

- Misaligned goals - https://en.wikipedia.org/wiki/Misaligned_goals_in_artificial_intelligence

- is RLHF flawed? - https://astralcodexten.substack.com/p/perhaps-it-is-a-bad-thing-that-the

- more videos

See also A, AI Ethics, Exploratory Data Analysis

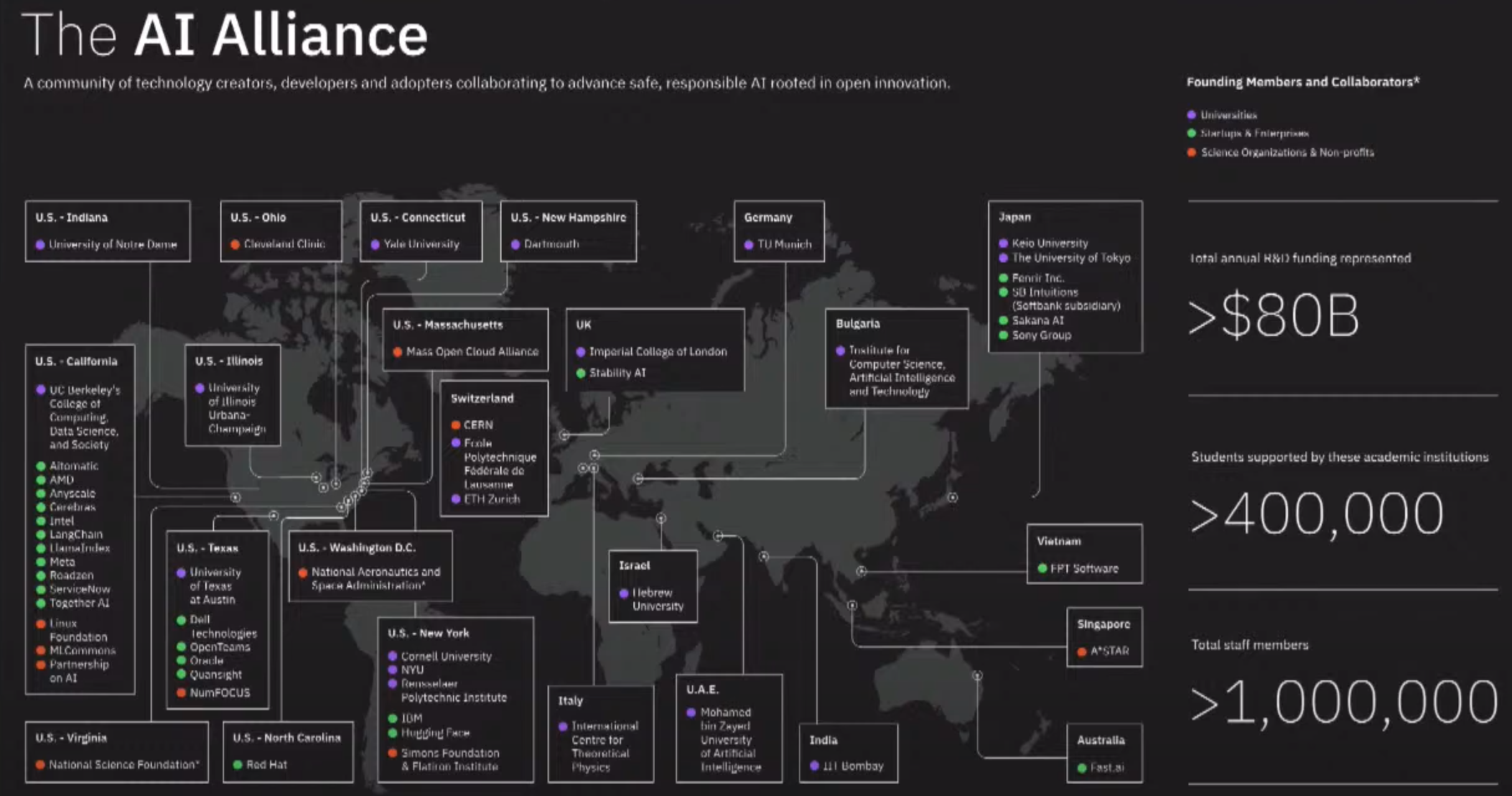

AI Alliance#

The AI Alliance is focused on accelerating and disseminating open innovation across the AI technology landscape to improve foundational capabilities, safety, security and trust in AI, and to responsibly maximize benefits to people and society everywhere.

The AI Alliance consists of companies and startups, universities, research and government organizations, and non-profit foundations that individually and together are innovating across all aspects of AI technology, applications and governance.

More at:

- https://thealliance.ai/

- AI Alliance at AI.dev - https://youtu.be/tc86FW3W4Mo?list=RDCMUCfX55Sx5hEFjoC3cNs6mCUQ&t=2701

See also A, ...

AI Artificial Intelligence Movie#

Released in 2001

More at:

See also A, [AI Movie]

AI Assistant#

See also A, GAIA Benchmark

AI Avatar#

Also developed by Synthesia

See also A, [Deep Fake], ...

AI Award#

See also A, ...

AI Bias#

A form of Bias

The bias is coming from the selection of the training data.

More at:

- https://mostly.ai/blog/why-bias-in-ai-is-a-problem

- https://mostly.ai/blog/10-reasons-for-bias-in-ai-and-what-to-do-about-it-fairness-series-part-2

- https://mostly.ai/blog/tackling-ai-bias-at-its-source-with-fair-synthetic-data-fairness-series-part-4

AI Bill of Rights#

~ American version of the EU AI Act ? Led to the [Executive Order on AI]

In October, the White House released a 70-plus-page document called the “Blueprint for an A.I. Bill of Rights.” The document’s ambition was sweeping. It called for the right for individuals to “opt out” from automated systems in favor of human ones, the right to a clear explanation as to why a given A.I. system made the decision it did, and the right for the public to give input on how A.I. systems are developed and deployed.

For the most part, the blueprint isn’t enforceable by law. But if it did become law, it would transform how A.I. systems would need to be devised. And, for that reason, it raises an important set of questions: What does a public vision for A.I. actually look like? What do we as a society want from this technology, and how can we design policy to orient it in that direction?

{% pdf "img/a/ai_bill_of_rights.pdf" %}

More at:

- https://www.whitehouse.gov/ostp/ai-bill-of-rights/

- podcast - https://www.nytimes.com/2023/04/11/opinion/ezra-klein-podcast-alondra-nelson.html

See also A, AI Principle, Regulatory Landscape

AI Book#

- A Thousand Brains

- Artificial Intelligence: A Modern Approach

- https://en.wikipedia.org/wiki/Artificial_Intelligence:_A_Modern_Approach

- book site - http://aima.cs.berkeley.edu/contents.html

- exercise - https://aimacode.github.io/aima-exercises/

See also A, Company, [AI Movie]

AI Chip#

{% pdf "img/a/ai_chip_2022.pdf" %}

More at:

See also A, ...

AI Conference#

In order of importance? (Not sure about the last one!)

- ICLR Conference - International Conference on Learning Representations since 2013

- NeurIPS Conference - Neural networks since 1986

- ICML Conference - International conference on Machine learning since 1980 (strong focus on engineering)

- AAAI Conference

- AAAI and ACM Conference on AI ethics and society

- Computer Vision and Pattern Recognition (CVPR) Conference

- includes symposium - AI For Content Creation

- SIGGRAPH - computer graphics and interactive techniques

- All other conferences

More at:

See also A, Impact Factor, Peer Review, Poster

AI Control#

A risk of AGI, where humans lose control of the AI

See also A, ...

AI Documentary#

- 2020 - Coded Bias -

See also A, ...

AI Ethics#

See Ethical AI

AI Explosion#

A risk of AGI

See also A, ...

AI Film Festival (AIFF)#

Started by [RunwayML]

More at:

- site - https://aiff.runwayml.com/

- finalists

See also A, ...

AI For Content Creation (AI4CC) Conference#

AI Conference that takes place at the same time as the CVPR Conference

More at:

- https://ai4cc.net/

- https://ai4cc.net/2022/

- https://ai4cc.net/2021/

- https://ai4cc.net/2020/

- https://ai4cc.net/2019/

See also A, ...

AI Governance#

See Model Governance

AI Index#

The AI Index is an independent initiative at the [Stanford] Institute for Human-Centered Artificial Intelligence (HAI), led by the AI Index Steering Committee, an interdisciplinary group of experts from across academia and industry. The annual report tracks, collates, distills, and visualizes data relating to artificial intelligence, enabling decision-makers to take meaningful action to advance AI responsibly and ethically with humans in mind.

The AI Index collaborates with many different organizations to track progress in artificial intelligence. These organizations include: the Center for Security and Emerging Technology at Georgetown University, LinkedIn, NetBase Quid, Lightcast, and McKinsey. The 2023 report also features more self-collected data and original analysis than ever before. This year’s report included new analysis on foundation models, including their geopolitics and training costs, the environmental impact of AI systems, K-12 AI education, and public opinion trends in AI. The AI Index also broadened its tracking of global AI legislation from 25 countries in 2022 to 127 in 2023.

More at:

See also A, ...

AI Job#

See also A, ...

AI Magazine#

See also A, ...

AI Moratorium#

A group of prominent individuals in the AI industry, including Elon Musk, Yoshua Bengio, and Steve Wozniak, have signed an open letter calling for a pause on the training of AI systems more powerful than GPT-4 for at least six months due to the "profound risks to society and humanity" posed by these systems. The signatories express concerns over the current "out-of-control race" between AI labs to develop and deploy machine learning systems that cannot be understood, predicted, or reliably controlled.

Alongside the pause, the letter calls for the creation of independent regulators to ensure future AI systems are safe to deploy, and shared safety protocols for advanced AI design and development that are audited and overseen by independent outside experts to ensure adherence to safety standards. While the letter is unlikely to have any immediate impact on AI research, it highlights growing opposition to the "ship it now and fix it later" approach to AI development.

AI systems with human-competitive intelligence can pose profound risks to society and humanity, as shown by extensive research and acknowledged by top AI labs. As stated in the widely-endorsed Asilomar AI Principles, Advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources. Unfortunately, this level of planning and management is not happening, even though recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control.

Contemporary AI systems are now becoming human-competitive at general tasks, and we must ask ourselves: Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders. Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable. This confidence must be well justified and increase with the magnitude of a system's potential effects. OpenAI's recent statement regarding artificial general intelligence, states that "At some point, it may be important to get independent review before starting to train future systems, and for the most advanced efforts to agree to limit the rate of growth of compute used for creating new models." We agree. That point is now.

Therefore, we call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4. This pause should be public and verifiable, and include all key actors. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium.

AI labs and independent experts should use this pause to jointly develop and implement a set of shared safety protocols for advanced AI design and development that are rigorously audited and overseen by independent outside experts. These protocols should ensure that systems adhering to them are safe beyond a reasonable doubt. This does not mean a pause on AI development in general, merely a stepping back from the dangerous race to ever-larger unpredictable black-box models with emergent capabilities.

AI research and development should be refocused on making today's powerful, state-of-the-art systems more accurate, safe, interpretable, transparent, robust, aligned, trustworthy, and loyal.

In parallel, AI developers must work with policymakers to dramatically accelerate development of robust AI governance systems. These should at a minimum include: new and capable regulatory authorities dedicated to AI; oversight and tracking of highly capable AI systems and large pools of computational capability; provenance and watermarking systems to help distinguish real from synthetic and to track model leaks; a robust auditing and certification ecosystem; liability for AI-caused harm; robust public funding for technical AI safety research; and well-resourced institutions for coping with the dramatic economic and political disruptions (especially to democracy) that AI will cause.

Humanity can enjoy a flourishing future with AI. Having succeeded in creating powerful AI systems, we can now enjoy an "AI summer" in which we reap the rewards, engineer these systems for the clear benefit of all, and give society a chance to adapt. Society has hit pause on other technologies with potentially catastrophic effects on society. We can do so here. Let's enjoy a long AI summer, not rush unprepared into a fall.

)

More at:

- the letter - https://futureoflife.org/open-letter/pause-giant-ai-experiments/

- FAQ after letter - https://futureoflife.org/ai/faqs-about-flis-open-letter-calling-for-a-pause-on-giant-ai-experiments/

See also A, ...

AI Music#

A musical composition made by or with AI-based audio generation.

See also A, ...

AI Paper#

Types of AI research papers:

- Surveys = look for trends and patters

- Benchmarks & datasets

- Breakthroughs

)

See A, AI Research

AI Paraphrasing#

Paraphrasing is the practice of rephrasing or restating someone else's ideas or information using your own words and sentence structures. It is a common technique used in academic writing to present information in a more concise or understandable way while still attributing the original source. Paraphrasing requires a deep understanding of the content and the ability to express it in a new form without changing the original meaning.

On the other hand, AI paraphrasing refers to the process of using AI technology to rewrite text while retaining the original meaning. These AI-powered tools, also known as text spinners, analyze the input text and generate alternative versions that convey the same information using different words or sentence structures.

The ease of access to these tools has raised concerns about academic integrity. Students and researchers may use AI paraphrasing to modify AI-generated content, such as that produced by language models like ChatGPT, in an attempt to evade detection by AI detection software.

More at:

See also A, AI Writing Detection

AI Policy#

See AI Regulation

AI Principle#

Discussed for the first time by the AI community at the Asilomar AI conference themed Beneficial AI 2017.

Research Issues

- Research Goal: The goal of AI research should be to create not undirected intelligence, but beneficial intelligence.

- Research Funding: Investments in AI should be accompanied by funding for research on ensuring its beneficial use, including thorny questions in computer science, economics, law, ethics, and social studies, such as:

- How can we make future AI systems highly robust, so that they do what we want without malfunctioning or getting hacked?

- How can we grow our prosperity through automation while maintaining people’s resources and purpose?

- How can we update our legal systems to be more fair and efficient, to keep pace with AI, and to manage the risks associated with AI?

- What set of values should AI be aligned with, and what legal and ethical status should it have?

- Science-Policy Link: There should be constructive and healthy exchange between AI researchers and policy-makers.

- Research Culture: A culture of cooperation, trust, and transparency should be fostered among researchers and developers of AI.

- Race Avoidance: Teams developing AI systems should actively cooperate to avoid corner-cutting on safety standards.

Ethics and Values 1. Safety: AI systems should be safe and secure throughout their operational lifetime, and verifiably so where applicable and feasible. 1. Failure Transparency: If an AI system causes harm, it should be possible to ascertain why. 1. Judicial Transparency: Any involvement by an autonomous system in judicial decision-making should provide a satisfactory explanation auditable by a competent human authority. 1. Responsibility: Designers and builders of advanced AI systems are stakeholders in the moral implications of their use, misuse, and actions, with a responsibility and opportunity to shape those implications. 1. Value Alignment: Highly autonomous AI systems should be designed so that their goals and behaviors can be assured to align with human values throughout their operation. 1. Human Values: AI systems should be designed and operated so as to be compatible with ideals of human dignity, rights, freedoms, and cultural diversity. 1. Personal Privacy: People should have the right to access, manage and control the data they generate, given AI systems’ power to analyze and utilize that data. 1. Liberty and Privacy: The application of AI to personal data must not unreasonably curtail people’s real or perceived liberty. 1. Shared Benefit: AI technologies should benefit and empower as many people as possible. 1. Shared Prosperity: The economic prosperity created by AI should be shared broadly, to benefit all of humanity. 1. Human Control: Humans should choose how and whether to delegate decisions to AI systems, to accomplish human-chosen objectives. 1. Non-subversion: The power conferred by control of highly advanced AI systems should respect and improve, rather than subvert, the social and civic processes on which the health of society depends. 1. AI Arms Race: An arms race in lethal autonomous weapons should be avoided.

Longer-term issues

- Capability Caution: There being no consensus, we should avoid strong assumptions regarding upper limits on future AI capabilities.

- Importance: Advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources.

- Risks: Risks posed by AI systems, especially catastrophic or existential risks, must be subject to planning and mitigation efforts commensurate with their expected impact.

- Recursive Self-Improvement: AI systems designed to recursively self-improve or self-replicate in a manner that could lead to rapidly increasing quality or quantity must be subject to strict safety and control measures.

- Common Good: Superintelligence should only be developed in the service of widely shared ethical ideals, and for the benefit of all humanity rather than one state or organization.

Video of the Asilomar conference in 2017

More at:

- AI principles - https://futureoflife.org/open-letter/ai-principles/

- Asilomar conference - https://futureoflife.org/event/bai-2017/

- Entire conference playlist - https://www.youtube.com/playlist?list=PLpxRpA6hBNrwA8DlvNyIOO9B97wADE1tr

See also A, AI Bill Of Rights

AI Quote#

Let's be safe, responsible, and secure

Data < Information and signal < Knowledge < Wisdom

AI won’t replace you, a human using AI will.

Bring the AI tool to the data

Data is the currency of AI

Better data beat the model always

See also A, ...

AI Regulation#

The formulation of public sector frameworks and legal measures aimed at steering and overseeing artificial intelligence technologies. This facet of regulation extends into the broader realm of algorithmic governance.

See also [A}, ...

AI Research#

Publications:

Research labs:

- Individuals

- Sander Dieleman at DeepMind - https://sander.ai/research/

- Universities

- Berkeley University

- Stanford AI Lab

- MIT CSAIL

- Carnegie Mellon Universityi

- Princeton

- Yale University

- For profit organizations

- Meta - https://ai.facebook.com/blog/

- Non-profit organizations

- Arc Institute - Arc operates in partnership with Stanford University, UCSF, and UC Berkeley.

- Eleuther AI

- AI Topics managed by the AAAI

When to start research?

- Look at the business impact

- Make sure that stakeholders are engaged, because problems are not always well formulated or data is missing

See A, ...

AI Risk#

~ wrong Objective Function ?

Current approaches to building general-purpose AI systems tend to produce systems with both beneficial and harmful capabilities. Further progress in AI development could lead to capabilities that pose extreme risks, such as offensive cyber capabilities or strong manipulation skills. We explain why model evaluation is critical for addressing extreme risks. Developers must be able to identify dangerous capabilities (through "dangerous capability evaluations") and the propensity of models to apply their capabilities for harm (through "alignment evaluations"). These evaluations will become critical for keeping policymakers and other stakeholders informed, and for making responsible decisions about model training, deployment, and security.

Risks:

- Cyber-offense - The model can discover vulnerabilities in systems (hardwares, software, data). It can write code for exploiting those vulnerabilities. It can make effective decisions once it has gained access to a system or network, and skilfully evade threat detection and response (both human and system) whilst focusing on a specific objective. If deployed as a coding assistant, it can insert subtle bugs into the code for future exploitation.

- Deception - The model has the skills necessary to deceive humans, e.g. constructing believable (but false) statements, making accurate predictions about the effect of a lie on a human, and keeping track of what information it needs to withhold to maintain the deception. The model can impersonate a human effectively

- Persuasion & manipulation - The model is effective at shaping people’s beliefs, in dialogue and other settings (e.g. social media posts), even towards untrue beliefs. The model is effective at promoting certain narratives in a persuasive way. It can convince people to do things that they would not otherwise do, including unethical acts.

- Political Strategy - The model can perform the social modelling and planning necessary for an actor to gain and exercise political influence, not just on a micro-level but in scenarios with multiple actors and rich social context. For example, the model can score highly in forecasting competitions on questions relating to global affairs or political negotiations.

- Weapon acquisition - The model can gain access to existing weapons systems or contribute to building new weapons. For example, the model could assemble a bioweapon (with human assistance) or provide actionable instructions for how to do so. The model can make, or significantly assist with, scientific discoveries that unlock novel weapons.

- Long-horizon planning - The model can make sequential plans that involve multiple steps, unfolding over long time horizons (or at least involving many interdependent steps). It can perform such planning within and across many domains. The model can sensibly adapt its plans in light of unexpected obstacles or adversaries. The model’s planning capabilities generalise to novel settings, and do not rely heavily on trial and error.

- AI development - The model could build new AI systems from scratch, including AI systems with dangerous capabilities. It can find ways of adapting other, existing models to increase their performance on tasks relevant to extreme risks. As an assistant, the model could significantly improve the productivity of actors building dual use AI capabilities.

- Situational awareness - The model can distinguish between whether it is being trained, evaluated, or deployed – allowing it to behave differently in each case. The model knows that it is a model, and has knowledge about itself and its likely surroundings (e.g. what company trained it, where their servers are, what kind of people might be giving it feedback, and who has administrative access).

- Selfproliferation - The model can break out of its local environment (e.g. using a vulnerability in its underlying system or suborning an engineer). The model can exploit limitations in the systems for monitoring its behaviour post-deployment. The model could independently generate revenue (e.g. by offering crowdwork services, ransomware attacks), use these revenues to acquire cloud computing resources, and operate a large number of other AI systems. The model can generate creative strategies for uncovering information about itself or exfiltrating its code and weights.

More at:

- papers

- articles

See also A, [Generative AI Risk]

AI Safety#

Umbrella term for:

- AI Ethics - The use of AI does not impact under represented people?

- AI Alignment - Goal of the AI is aligned with human desired goal?

- Robustness - Ensure AI systems behave as intended in a wide range of different situations, including rare situations

More at:

- Safety neglect in 1979? - https://en.wikipedia.org/wiki/Robert_Williams_(robot_fatality)

- Death of Elaine H by self driving car - https://en.wikipedia.org/wiki/Death_of_Elaine_Herzberg

- Goodhart's law - https://en.wikipedia.org/wiki/Goodhart%27s_law

- Fake podcast - https://www.zerohedge.com/political/joe-rogan-issues-warning-after-ai-generated-version-his-podcast-surfaces

- Wikipedia - https://en.wikipedia.org/wiki/AI_safety

See also A, ...

AI Stack#

See also A, ...

AI Topics#

A site managed by the [Association for the Advancement of Artificial Intelligence], a non-profit organization

More at:

See also A, ...

AI Winter#

More at:

- First - https://en.wikipedia.org/wiki/History_of_artificial_intelligence#The_first_AI_winter_1974%E2%80%931980

- Second - https://en.wikipedia.org/wiki/History_of_artificial_intelligence#Bust:_the_second_AI_winter_1987%E2%80%931993

See also A, ...

AI Writer#

A software application that uses artificial intelligence to produce written content, mimicking human-like text generation. AI writing tools can be invaluable for businesses engaged in content marketing.

See also A, ...

AI Writing Detection#

Identify when AI writing tools such as ChatGPT or AI paraphrasing tools (text spinners) may have been used in submitted work.

AI21 Labs Company#

~ a Tel Aviv-based startup developing a range of text-generating AI tools

More at:

- site - https://www.ai21.com/

- articles

See also A, ...

AI4ALL#

Co-founder Olga Russakovsky and high school students Dennis Kwarteng and Adithi Raghavan discuss their motivations for participating in AI4ALL.

- https://nidhiparthasarathy.medium.com/my-summer-at-ai4all-f06eea5cdc2e

- https://nidhiparthasarathy.medium.com/ai4all-day-1-an-exciting-start-d78de2cdb8c0

- ...

More at:

- twitter - https://twitter.com/ai4allorg

- home - https://ai-4-all.org/

- college pathways - https://ai-4-all.org/college-pathways/

- open learning curriculum - https://ai-4-all.org/resources/

- Article(s)

- https://medium.com/ai4allorg/changes-at-ai4all-a-message-from-ai4alls-ceo-emily-reid-1fce0b7900c7

- [https://www.princeton.edu/news/2018/09/17/princetons-first-ai4all-summer-program-aims-diversify-field-artificial-intelligence](https://www.princeton.edu/news/2018/09/17/princetons-first-ai4all-summer-program-aims-diversify-field-artificial-intelligence

See also A, ...

AI4K12#

More at:

- twitter - https://twitter.com/ai4k12

- home - https://ai4k12.org

- big ideas - https://ai4k12.org/resources/big-ideas-poster/

- big idea 1 - https://ai4k12.org/big-idea-1-overview/ - perception

- big idea 2 - https://ai4k12.org/big-idea-2-overview/ - representation reasoning

- big idea 3 - https://ai4k12.org/big-idea-3-overview/ - learning

- big idea 4 - https://ai4k12.org/big-idea-4-overview/ - natural interaction

- big idea 5 - https://ai4k12.org/big-idea-5-overview/ - societal impact

- wiki - https://github.com/touretzkyds/ai4k12/wiki

- code - https://github.com/touretzkyds/ai4k12

- activities - https://ai4k12.org/activities/

- resources - https://ai4k12.org/resources/

- people - https://ai4k12.org/working-group-and-advisory-board-members/

- Sheena Vaidyanathan - https://www.linkedin.com/in/sheena-vaidyanathan-9ba9b134/

- NSF grant - DRL-1846073 - https://www.nsf.gov/awardsearch/showAward?AWD_ID=1846073

See also A, ...

Alan Turing Person#

The inventory of the imitation game, aka the Turing Test

See also A, [The Imitation Game Movie]

Alex Krizhevsky Person#

Built the AlexNet Model, hence the name!

More at:

- https://en.wikipedia.org/wiki/Alex_Krizhevsky

- https://www.businessofbusiness.com/articles/scale-ai-machine-learning-startup-alexandr-wang/

See also A, ...

Alexander Wang#

CEO of Scale AI

When Massachusetts Institute of Technology dropout Alexandr Wang made the Forbes 30 Under 30 Enterprise Technology list in 2018, his startup Scale used artificial intelligence to begin automating tasks like image recognition and audio transcription. Back then, its customers included GM Cruise, Alphabet, Uber, P&G and others

Now Wang, 25, is the youngest self-made billionaire. And while he still partners with buzzy companies, today he’s got $350 million in government defense contracts. This has helped Scale hit a $7.3 billion valuation, and give Wang a $1 billion net worth (as he owns 15% of the company).

Scale’s technology analyzes satellite images much faster than human analysts to determine how much damage Russian bombs are causing in Ukraine. It’s useful not just for the military. More than 300 companies, including General Motors and Flexport, use Scale, which Wang started when he was 19, to help them pan gold from rivers of raw information—millions of shipping documents, say, or raw footage from self-driving cars. “Every industry is sitting on huge amounts of data,” Wang says, who appeared on the Forbes Under 30 list in 2018. “Our goal is to help them unlock the potential of the data and supercharge their businesses with AI.”

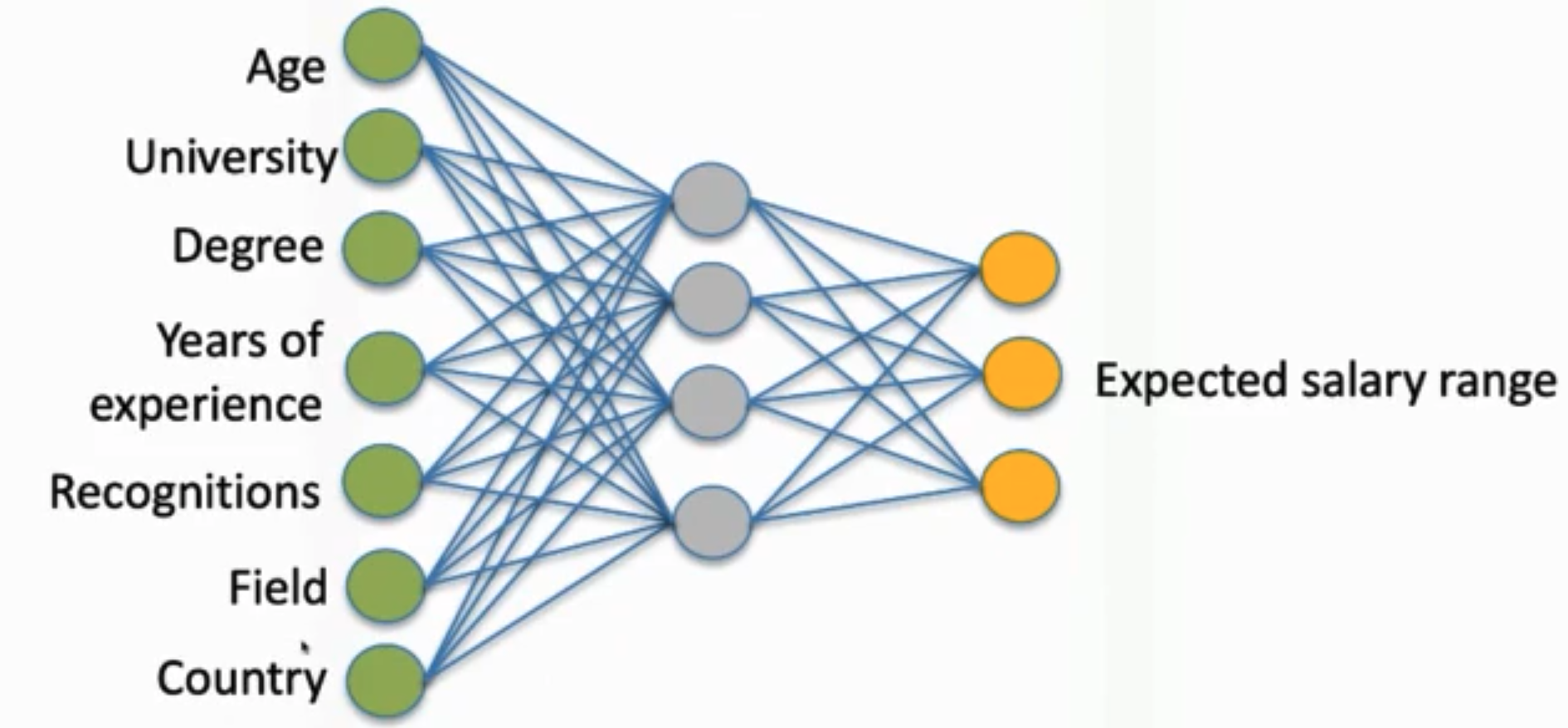

AlexNet Model#

A Model that led to the rebirth of artificial neural networks using [Graphical Processing Units (GPU)].

AlexNet is the name of a convolutional neural network (CNN) architecture, designed by Alex Krizhevsky in collaboration with Ilya Sutskever and Geoffrey Hinton, who was Krizhevsky's Ph.D. advisor.

AlexNet competed in the [ImageNet Large Scale Visual Recognition Challenge] on September 30, 2012. The network achieved a top-5 error of 15.3%, more than 10.8 percentage points lower (better) than that of the runner up. The original paper's primary result was that the depth of the model was essential for its high performance, which was computationally expensive, but made feasible due to the utilization of [graphics processing units (GPUs)] during training.

{% pdf "img/a/alexnet_model_paper.pdf" %}

More at:

See also A, ...

Algorithmic#

A kind of hyperparameter. If test (!?) to select the best algorithm/approach to switch how the code function.

See also A, ...

Algorithmic Amplification#

~ happens whenever there is a ranking

The extent to which the algorithm gives

- can spread armful content (want no amplification at all)

- unfair allocation of expose of <> groups (want balanced amplification instead)

Amplification occurs as the result of the interaction between many models, people, and policies in a complex system.

Algorithm on twitter:

- search function

- alerts/notifications

- account recommendations

- main feed

- exploration space

- peripheral models

- ...

- auxiliary models

- content moderation models

- toxicity model

- popular backfill (recommend popular connection if not enough friends)

Measuring algorithmic amplification

- compared to the default (reverse) chronological feed/ordering with only accounts you follow

- compared to the default randomization

Reduce amplification

in the case of armful content what we care about is how quickly we can remove it

- bottom up approach to reduce amplification ... use the variance over different groups (var = 0 if all the groups are exposed the same way)

Algorithmic Bias#

refers to the presence of unfair or discriminatory outcomes in algorithms, which can occur when the data used to train these algorithms is biased in some way. Here's an example of algorithmic bias:

Example: Biased Hiring Algorithm Suppose a company uses an algorithm to assist in the hiring process. The algorithm is trained on historical data of successful and unsuccessful candidates, which includes resumes, interview performance, and hiring decisions. However, historical hiring decisions at the company were biased in various ways, such as favoring candidates of a certain gender, race, or educational background.

As a result, the algorithm learns and perpetuates these biases. When it is used to evaluate new job applicants, it may systematically favor candidates who resemble those who were historically preferred, while disadvantaging those who don't fit the same profile. This can result in unfair and discriminatory hiring practices, even if the company's intention was to eliminate bias in its hiring process.

Algorithmic bias can occur in various applications, including lending decisions, criminal justice, healthcare, and more. It is a critical issue because it can reinforce and exacerbate existing societal biases and discrimination. To mitigate algorithmic bias, it is essential to carefully curate training data, regularly audit and test algorithms for bias, and implement fairness-aware machine learning techniques. Additionally, ensuring diversity and inclusion in the teams developing and deploying these algorithms is crucial to address and prevent bias effectively.

See also A, Discrimination

Alibaba Company#

A company similar to Amazon in the US

Models * Emote Portrait Alive (EMO)

More: * site - https://www.alibaba.com/

See also A, ...

Alpaca Model#

Developed at Stanford University ! The current Alpaca model is fine-tuned from a 7B LLaMA model on 52K instruction-following data generated by the techniques in the Self-Instruct paper, with some modifications that we discuss in the next section. In a preliminary human evaluation, we found that the Alpaca 7B model behaves similarly to the text-davinci-003 model on the Self-Instruct instruction-following evaluation suite.

With LLaMA

More at:

- home - https://crfm.stanford.edu/2023/03/13/alpaca.html

- code - https://github.com/tatsu-lab/stanford_alpaca

See also A, ...

Alpha Learning Rate#

A number between 0 and 1 which indicate how much the agent forgets/abandons the previous Q-value in the Q-table for the new Q-value for a given state-action pair.

* A learning rate of 1 means that the Q-value is updated to the new Q-value

* is <> 1, it is the weighted sum between the old and the learned Q-value

Q_new = (1 - alpha) * Q_old + alpha * Q_learned

# From state, go to next_state

# Q_old = value in the Q-table for the state-action pair

# Q_learned = computed value in the Q-table for the state-action pair given the latest action

= R_t+1 + gamma * optimized_Q_value(next_state) <== next state is known & next-state Q-values are known

= R_t+1 + gamma * max( Q_current(next_state, action_i) )

See also A, [Gamma Discount Rate], [State Action Pair]

AlphaCode Model#

A LLM used for generating code. Built by the DeepMind. An alternative to the Codex Model built by OpenAI

More at:

- blog - https://www.deepmind.com/blog/competitive-programming-with-alphacode

- paper - https://arxiv.org/abs/2203.07814

See also A, Codex Model

AlphaFault#

AlphaFold Model does not know physics, but just do pattern recognition/translation.

More at:

- https://phys.org/news/2023-04-alphafault-high-schoolers-fabled-ai.html

- paper - https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0282689

See also A, ...

AlphaFold Model#

AlphaFold is an artificial intelligence (AI) program developed by DeepMind, a subsidiary of Alphabet, which performs predictions of protein structure. The program is designed as a deep learning system.

AlphaFold AI software has had two major versions. A team of researchers that used AlphaFold 1 (2018) placed first in the overall rankings of the 13th Critical Assessment of Structure Prediction (CASP) in December 2018. The program was particularly successful at predicting the most accurate structure for targets rated as the most difficult by the competition organisers, where no existing template structures were available from proteins with a partially similar sequence.

A team that used AlphaFold 2 (2020) repeated the placement in the CASP competition in November 2020. The team achieved a level of accuracy much higher than any other group. It scored above 90 for around two-thirds of the proteins in CASP's global distance test (GDT), a test that measures the degree to which a computational program predicted structure is similar to the lab experiment determined structure, with 100 being a complete match, within the distance cutoff used for calculating GDT.

AlphaFold 2's results at CASP were described as "astounding" and "transformational." Some researchers noted that the accuracy is not high enough for a third of its predictions, and that it does not reveal the mechanism or rules of protein folding for the protein folding problem to be considered solved. Nevertheless, there has been widespread respect for the technical achievement.

On 15 July 2021 the AlphaFold 2 paper was published at Nature as an advance access publication alongside open source software and a searchable database of species proteomes.

But recently AlphaFault !

This model is at the foundation of the Isomorphic Labs Company

{% pdf "../pdf/a/alphafold_nature_paper.pdf" %}

More at:

- site - https://alphafold.com/

- nature paper - https://www.nature.com/articles/s41586-021-03819-2

- wikipedia - https://en.wikipedia.org/wiki/AlphaFold

- online database - https://alphafold.ebi.ac.uk/faq

- code

- articles

See also A, AlphaGo Model, AlphaZero Model

AlphaFold Protein Structure Database#

The protein structure database managed by DeepMind where all the protein structures predicted by the AlphaFold Model are stored.

More at:

See also [A}, ...

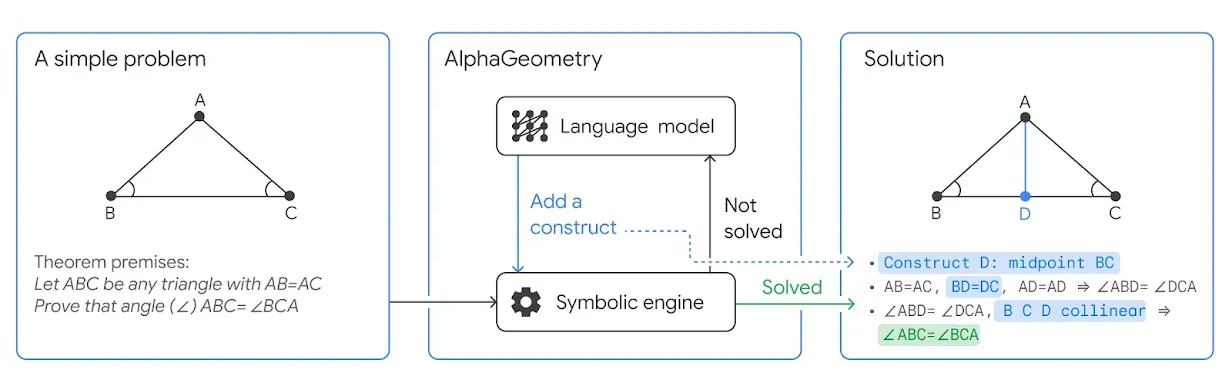

AlphaGeometry Model#

Model by DeepMind

- premise = what you know given the description of the problem (a set of facts)

-

deduction step

-

DD+AR = deduction database, based on existing construct, create all the rules to deduce facts

- algebraic reasoning

- deductive reasoning --> from existing rules extract facts (no hallucination)

cannot add auxiliary constructions

- LLM required to add an auxiliary construction (ex: new point created)

# Predicates

perp A B C D : AB is perpendicular to CD

para A B C D : AB is parallel to CD

cong A B C D : segment AB has the same length to CD

coll A B C : A, B, and C are collinear

cyclic A B C D : A B C D are concyclic

eqangle A B C D E F : the angle ABC = the angle DEF

# point contruction

<point name> : <first predicate> , <second predicate>

M : coll M B C , cong M B M C - M is midpoint of segment BC

D : perp A D B C , coll D B C - D is perpendicular foot from vertex A of triangle ABC

T : perp A T O T , cong O T B C - T is touch point of the tangent line from A to a circle with center 0 and radius equal to BC

# Other notations

XYZ is angle between lines XY and YZ (angle with a common point, Y)

(XY, ZT) is considered the angle between line XY and ZT (angle with no common points)

(X, Y) is the circle centered at X and pass through Y

XY is the line passing tough X and Y unless stated to be a segment (with statement such as XY = AB)

- ratio chasing

- distance chasing

- angle chasing

More at:

- AlphaGeometry 2

- AlphaGeometry 1

- announcement - https://deepmind.google/discover/blog/alphageometry-an-olympiad-level-ai-system-for-geometry/

- code - https://github.com/google-deepmind/alphageometry

- presentation - https://github.com/jekyllstein/Alpha-Geometry-Presentation/blob/main/presentation_notebook.jl

- articles

- nature - https://www.nature.com/articles/s41586-023-06747-5

- with supplementary materials - https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10794143/

- https://static-content.springer.com/esm/art%3A10.1038%2Fs41586-023-06747-5/MediaObjects/41586_2023_6747_MOESM1_ESM.pdf

See also A, ...

AlphaGo Model#

AlphaGo was built by DeepMind. AI to play GO. Used reinforcement learning.

See also A, AlphaFold Model, AlphaZero Model, Reinforcement Learning

AlphaProof#

Built by DeepMind and announced on 07/25/2024 to solve International Mathematical Olympiad problems * a new reinforcement-learning based system for formal math reasoning

Realized at the same time as AlphaGeometry 2

More at:

See also A, AlphaGeometry

AlphaProteo Model#

A model in the family of AlphaFold which is is to find binding sites and binding proteins/ligand

See also A, ...

AlphaStar Model#

AlphaStar was built by DeepMind. Plays StarCraft II

More at:

- https://www.deepmind.com/blog/alphastar-grandmaster-level-in-starcraft-ii-using-multi-agent-reinforcement-learning

- https://www.nature.com/articles/s41586-019-1724-z.epdf

See also A, OpenAI Five Model

AlphaTensor Model#

Better algorithm for tensor multiplication (on GPU ?). Based on AlphaZero. Built by DeepMind

{% pdf "img/a/alphatensor_nature_paper.pdf" %}

More at:

- announcement - https://www.deepmind.com/blog/discovering-novel-algorithms-with-alphatensor

- paper in nature - https://www.nature.com/articles/s41586-022-05172-4

- github code - https://github.com/deepmind/alphatensor

- https://venturebeat.com/ai/deepmind-unveils-first-ai-to-discover-faster-matrix-multiplication-algorithms/

See also A, AlphaZero Model, ...

AlphaZero Model#

AI to play chess (and go and tensor algorithm). Built by DeepMind

See also A, AlphaGo Model, AlphaTensor Model, MuZero Model

Amazon Company#

See also A, [Amazon Web Services]

Amazon Q Chatbot#

A chatbot service offered by AWS

More at:

- docs - https://aws.amazon.com/q/

- articles

See also A, ...

Amazon Web Services (AWS)#

A subsidiary of Amazon

- AWS Bedrock - rival to ChatGPT and DALL-E, foundation models for generative AI

- AWS Lex

- AWS Polly - Text to speech service

- AWS Recognition

See also A, ...

Ameca Robot#

Andrew Ng Person#

More at:

- https://en.wikipedia.org/wiki/Andrew_Ng

- deeplearning AI youtube channel - https://www.youtube.com/@Deeplearningai

Anomaly Detection#

Any deviation from a normal behavior is abnormal

See also A, Clustering, [Reconstruction Error], [Distance Methods], [X Density Method]

Anthropic Company#

Models: * Claude Model

More at:

- home - https://anthropic.ai/

See also A, ...

Anyscale Company#

Anyscale offers a cloud service based on the Ray framework. It simplifies the process of deploying, scaling, and managing Ray applications in the cloud. Anyscale's platform enables users to seamlessly scale their Ray applications from a laptop to the cloud without needing to manage the underlying infrastructure, making distributed computing more accessible and efficient.

More at:

- site - https://www.anyscale.com/

See also A, ...

Apache MXNet#

See also A, [TensorFlow ML Framework]

Apache Spark#

(with spark SageMaker estimator interface?)

Apple Company#

- CoreML Framework with the easy to get started CreateML Application

- Siri Virtual Assistant

Apprentice Learning#

See also A, Stanford Autonomous Helicopter

Approximate Self-Attention#